Chapter 15 Interpreting Laboratory Tests

Clinical decision making using diagnostic laboratory testing is based on the assumption that a given test is accurate and precise. Diagnostic test accuracy is the ability of a test to distinguish patients with a disease from those who are disease free (Leeflang et al., 2008). Test accuracy is not necessarily fixed; accuracy may vary among patient populations and with different clinical conditions. Precision is a measure of the reproducibility of a test measurement when the same specimen is rechecked under the same circumstances. Sources of imprecision include biologic variability and analytic variability. Biologic variability is the variation in a test result in the same person at different times because of physiologic processes, constitutional factors, and extrinsic factors (McClatchey, 2002) (Table 15-1). Analytic variation refers to the variation in repeated tests on the same specimen and relates to analytic technique and specimen processing. With current technology, biologic variation plays a larger role than analytic variation in most laboratory tests.

Table 15-1 Biologic Variables that Affect Test Results

| Category | Variable |
|---|---|
| Biologic Rhythms | Circadian |
| | Ultradian |
| | Infradian |
| Constitutional Factors | Age |
| | Gender |
| | Genotype |
| Extrinsic Factors | Posture |
| | Exercise |
| | Diet: caffeine |
| | Drugs and pharmaceuticals: oral contraceptives |
| | Alcohol use |
| | Pregnancy |
| | Intercurrent illness |

From Holmes EA. The interpretation of laboratory tests. In McClatchey KD (ed). Clinical Laboratory Medicine, 2nd ed. Philadelphia, Lippincott–Williams & Wilkins, 2002, p 98.

The Concept of “Normal”

The current standard of comparison for laboratory results is the reference range, which is frequently defined by results that are between chosen percentiles (typically the 2.5th to 97.5th percentiles) in a healthy reference population. Several problems are encountered when deriving a reference range. Often, the reference population is not representative of persons being tested. Differences in gender, age distribution, race, ethnicity, or the setting (hospitalized vs. ambulatory patients) between the reference population and the person receiving the test may be present. The person being tested should be tested under similar physiologic conditions (e.g., fasting, sitting, resting) as the reference population. The size of the reference population may be too small to include a representative range of the population.
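The percentile definition of a reference range can be illustrated with a minimal sketch. The population below is simulated and purely hypothetical (the mean, spread, and sample size are assumptions, not values from any real assay); the point is only to show how the 2.5th and 97.5th percentiles bracket the central 95% of healthy results.

```python
import random
import statistics


def reference_interval(values):
    """Return the 2.5th and 97.5th percentiles of a list of results."""
    # quantiles(n=40) returns 39 cut points spaced 2.5% apart:
    # the first is the 2.5th percentile, the last is the 97.5th.
    cuts = statistics.quantiles(values, n=40)
    return cuts[0], cuts[-1]


# Hypothetical healthy reference population (simulated, not real data).
rng = random.Random(0)
healthy = [rng.gauss(4.5, 0.4) for _ in range(2000)]

low, high = reference_interval(healthy)
inside = sum(low <= v <= high for v in healthy) / len(healthy)

print(f"reference interval: {low:.2f} to {high:.2f}")
print(f"fraction of healthy results inside the interval: {inside:.3f}")
```

By construction, about 5% of this healthy population falls outside its own reference interval, which is why an isolated out-of-range value does not by itself indicate disease.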

Reference ranges for a particular test can be the manufacturer’s suggested reference range or may be modified because of differences in the population using the laboratory. The Clinical Laboratory Improvement Amendments of 1988 (CLIA) define three requirements for reference values: the normal or reference ranges must be made available to the ordering physician; the normal or reference ranges must be included in the laboratory procedure manual; and the laboratory must establish specifications for performance characteristics, including the reference range, for each test before reporting patient results. Using the manufacturer’s reference range is valid when the analytic processing of the test is the same as that done by the manufacturer and when the population being tested is similar to the reference population used to define the reference range. When a reference range is chosen to include 95% of results from the healthy reference population, 5% of healthy persons will fall outside the reference range for any single test. When more than one test is ordered, the probability increases that at least one result will be outside the reference range. Table 15-2 compares the number of independent tests ordered with the probability of an abnormal result being present in healthy persons.

Table 15-2 Probability that a Healthy Person Will Have an Abnormal Result with Multiple Tests

| Number of Independent Tests | Probability of Abnormal Result (%) |
|---|---|
| 1 | 5 |
| 2 | 10 |
| 5 | 23 |
| 10 | 40 |
| 20 | 64 |
| 50 | 92 |
| 90 | 99 |
| Infinity | 100 |

From Burke MD. Laboratory tests: basic concepts and realistic expectations. Postgrad Med 1978;63:55.
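The values in Table 15-2 follow from a simple calculation: if each test independently has a 95% chance of falling inside its reference range in a healthy person, the chance that at least one of n tests falls outside is 1 − 0.95ⁿ. The short sketch below reproduces the table under that independence assumption; the function name is illustrative only.

```python
def prob_any_abnormal(n_tests, coverage=0.95):
    """Probability that at least one of n independent tests is out of range
    in a healthy person, when each reference range covers `coverage` of
    healthy results."""
    return 1.0 - coverage ** n_tests


for n in (1, 2, 5, 10, 20, 50, 90):
    print(f"{n:>3} tests: {round(100 * prob_any_abnormal(n))}%")
```

In practice, results on a panel are often correlated rather than independent, so the true probabilities may differ somewhat from these figures.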

Evaluating a Test’s Performance Characteristics

Given that tests are not totally accurate or precise, one must have a way to quantify these shortcomings. A test’s ability to discriminate diseased from nondiseased persons is defined by its sensitivity, specificity, and positive and negative predictive values. Table 15-3 shows how each is calculated. Sensitivity and specificity are inherent technical aspects of a test and are independent of the prevalence of disease in the population tested. However, given that diseases have a spectrum of manifestations, sensitivity and specificity are improved if the population is heavily weighted with patients who have advanced (vs. early) illness.

Table 15-3 Diagnostic Test Performance Characteristics

| Finding | Disease Present | Disease Absent |
|---|---|---|
| Test positive | True positive (TP) | False positive (FP) |
| Test negative | False negative (FN) | True negative (TN) |

Sensitivity = TP/(TP + FN); Specificity = TN/(TN + FP).

Positive predictive value = TP/(TP + FP); Negative predictive value = TN/(TN + FN).
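As a worked illustration of the formulas in Table 15-3, the sketch below computes all four measures from a 2 × 2 table. The counts are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Compute the four performance characteristics from 2 x 2 table counts."""
    return {
        "sensitivity": tp / (tp + fn),  # diseased persons who test positive
        "specificity": tn / (tn + fp),  # disease-free persons who test negative
        "ppv": tp / (tp + fp),          # positive results that are true positives
        "npv": tn / (tn + fn),          # negative results that are true negatives
    }


# Hypothetical study: 100 diseased and 200 disease-free persons.
metrics = diagnostic_metrics(tp=90, fp=30, fn=10, tn=170)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Note that sensitivity and specificity are each computed within one column of the table, whereas the predictive values are computed across a row and therefore shift with the proportion of diseased persons in the sample.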

Separating Diseased from Disease-Free Persons

Under ideal circumstances, sensitivity and specificity approach 100%. In reality, they are lower. The best currently available test to decide who is diseased or disease-free could be imperfect and have sensitivities and specificities in the 80% range. Moreover, discrepancies between a test’s efficacy and its effectiveness are common. Efficacy is a test’s performance under ideal conditions, whereas effectiveness is its performance under usual circumstances. Tests under development are evaluated under highly rigorous criteria, but in clinical practice, inadvertent error can be introduced into the technical performance or interpretation of the test results. Also, test values for the diseased and disease-free populations overlap.

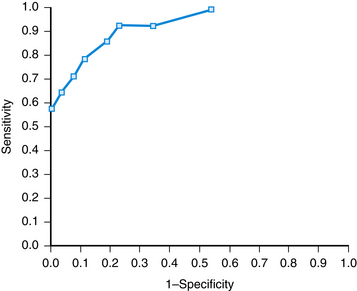

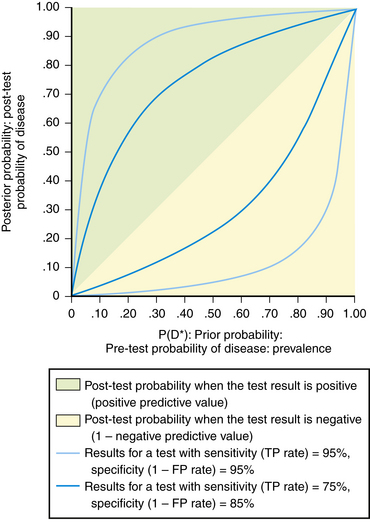

A cutoff value may be chosen to separate “normal” from abnormal (Figure 15-1). This decision is arbitrary and involves selecting a balance between sensitivity and specificity. The receiver operating characteristic (ROC) curve is a graphic analysis used to identify a cutoff that minimizes false-positive and false-negative results (Figure 15-2). The sensitivity and specificity are calculated for a number of cutoff values, with the variables 1-Specificity plotted on the x axis and Sensitivity plotted on the y axis. Each point on the curve represents a cutoff for the test. A perfect test would have a cutoff that allowed both 100% sensitivity and 100% specificity. This would be a point at the upper-left corner of the graph. The most efficient cutoff for a single test is the one that gives the most correct results, represented by the value that plots nearest to the upper-left corner of the graph.

Figure 15-1 Effect of changing a test’s cutoff value on disease classification.

(Modified from Cebul RD, Beck LH. Teaching Clinical Decision Making. Westport, Conn, Praeger, 1985, p 4.)
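The ROC procedure described above can be sketched in a few lines. The test values here are invented for illustration, higher values are assumed to indicate disease, and "nearest to the upper-left corner" is interpreted as the smallest straight-line distance to the point (0, 1); other definitions of the optimal cutoff exist.

```python
import math


def roc_points(diseased, disease_free, cutoffs):
    """Return (cutoff, 1 - specificity, sensitivity) for each cutoff.
    A result is called positive when it is >= the cutoff."""
    points = []
    for cutoff in cutoffs:
        sensitivity = sum(v >= cutoff for v in diseased) / len(diseased)
        specificity = sum(v < cutoff for v in disease_free) / len(disease_free)
        points.append((cutoff, 1 - specificity, sensitivity))
    return points


def nearest_to_corner(points):
    """Pick the point closest to the upper-left corner (x = 0, y = 1)."""
    return min(points, key=lambda p: math.hypot(p[1], 1 - p[2]))


# Hypothetical test values for the two overlapping populations.
diseased = [7, 8, 8, 9, 9, 10, 11, 12]
disease_free = [3, 4, 5, 5, 6, 6, 7, 8]

points = roc_points(diseased, disease_free, cutoffs=range(3, 13))
best = nearest_to_corner(points)
print(f"cutoff {best[0]}: sensitivity {best[2]:.3f}, specificity {1 - best[1]:.3f}")
```

Lowering the cutoff from the selected value raises sensitivity at the cost of specificity, and raising it does the reverse, which is the trade-off the curve makes visible.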

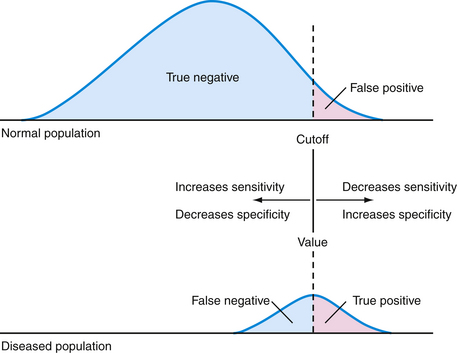

The predictive value of a test is directly related to the pretest probability of disease. When the prevalence of disease is high in the population, a positive test result is expected and a negative result is not expected, because the disease is common. Similarly, when the prevalence is low, a negative test result is anticipated because few people have the disease. These characteristics of predictive value become clinically useful when one compares the outcome of a positive or negative test result with the pretest probability of disease (Figure 15-3). Prevalence (pretest probability of disease) is plotted against predictive value for a positive and negative test. Note that a test result loses its ability to discriminate those who have disease from those who do not at the extremes of prevalence. If disease probability is low, a positive or negative result does not change the post-test probability much—it is still low. On the other hand, if disease probability is high, the post-test results, whether positive or negative, do not substantially alter an already high probability of disease being present. The predictive value has the greatest power to discriminate those with disease from those who are disease free in the mid-pretest probability range, near 50%. A positive test result suggests a higher post-test probability of disease than a negative result.
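The dependence of predictive value on pretest probability can be made concrete with a short calculation. The sketch below assumes a test with 80% sensitivity and 80% specificity (illustrative figures, in line with the "80% range" mentioned earlier) and shows the post-test probability of disease after a positive and after a negative result across a range of prevalences.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a test applied at a given pretest probability."""
    tp = sensitivity * prevalence
    fn = (1 - sensitivity) * prevalence
    fp = (1 - specificity) * (1 - prevalence)
    tn = specificity * (1 - prevalence)
    return tp / (tp + fp), tn / (tn + fn)


for prevalence in (0.01, 0.10, 0.50, 0.90, 0.99):
    ppv, npv = predictive_values(0.80, 0.80, prevalence)
    # Post-test probability of disease is PPV after a positive result
    # and 1 - NPV after a negative result.
    print(
        f"pretest {prevalence:.2f}: "
        f"post-test if positive {ppv:.3f}, if negative {1 - npv:.3f}"
    )
```

At the extremes of prevalence the two post-test probabilities stay close to the pretest value, whereas near 50% a positive and a negative result lead to clearly different estimates.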

Multiple Test Ordering

Considerations for Ordering Tests

The following section presents an overview of 40 commonly ordered tests. Each section discusses the physiologic significance of the test, a typical range of reference values, and a listing of some common disease states that might explain an abnormal result. The reference ranges for each test are intended as guides and may differ from the reference ranges used by different laboratories, depending on the reference population and the test methodology.

Albumin

Albumin is a transport protein that is produced mainly in the liver and maintains osmotic pressure. Albumin has a long half-life (20 days) and a small (~5%) daily turnover. In humans, albumin levels rise from birth up to age 1 year, thereafter remaining stable at approximately 3.5 to 5.5 grams per deciliter (g/dL) throughout adult life. Albumin levels are reduced with advancing liver disease, nephrotic syndrome, protein-losing enteropathy, malnutrition, and some inflammatory diseases (Table 15-4). Elevations of serum albumin are unusual except in dehydration.

Table 15-4 Causes of Decreased Albumin Levels

| Mechanism | Cause |
|---|---|
| Reduced Absorption | Malabsorption |
| | Malnutrition |
| Decreased Synthesis | Chronic liver disease |
| Protein Catabolism | Infection |
| | Hypothyroidism |
| | Burns |
| | Malignancy |
| | Chronic inflammation |
| Increased Losses | Nephrotic syndrome |
| | Cirrhosis |
| | Protein-losing enteropathies |
| | Hemorrhage |
| Dilutional | Syndrome of inappropriate antidiuretic hormone secretion (SIADH) |
| | Intravenous hydration |

Alkaline Phosphatase

Alkaline phosphatase (ALP) is found in a wide variety of tissues, including the liver, bone, intestine, and placenta. The reference value for ALP depends on age and gender, with higher levels in childhood, adolescence, and pregnancy. A typical reference range in an adult is 25 to 100 U/L. In adults, the source of an elevated ALP is the liver, bone, or medication (Table 15-5). Typically, hepatic elevations of ALP are suggestive of cholestatic liver disease or biliary tract dysfunction. Mild ALP elevations (one to two times above reference range) can occur with parenchymal liver disease, such as hepatitis or cirrhosis. Marked ALP elevations occur with infiltrative liver disease or biliary obstruction, intrahepatic or extrahepatic. A persistently elevated ALP level can be an early sign of primary biliary cirrhosis. In cholestatic liver disease, bilirubin and gamma-glutamyltransferase (GGT) levels are increased as well, with less prominent elevations in aminotransferase levels. To confirm a hepatic source of an elevated ALP level, one can simultaneously measure GGT, which is elevated in obstructive liver disease but not with bone disease. Imaging studies of the liver, by sonography or computed tomography (CT), can define an anatomic basis for obstruction in the setting of an elevated ALP level of hepatic origin.

Table 15-5 Causes of Increased Alkaline Phosphatase Levels

| Bone Origin |

| Paget’s disease |

| Osteomalacia |

| Rickets |

| Hyperparathyroidism |

| Metastatic disease |

| Liver Origin |

| Extrahepatic biliary obstruction |

| Pancreatic cancer |

| Biliary cancer |

| Common bile duct stone |

| Intrahepatic obstruction |

| Metastatic liver disease |

| Infiltrative diseases |

| Hepatitis |

| Primary biliary cirrhosis |

| Sclerosing cholangitis |

| Cirrhosis |

| Passive hepatic congestion |

| Other Causes |

| Drugs |

| Phenobarbital |

| Phenytoin |

| Chlorpropamide |

| Hyperthyroidism |

| Temporal arteritis |

Aminotransferases

Liver chemistry tests are widely used to assess hepatic function. Common markers of hepatocellular damage are the aminotransferases, aspartate aminotransferase (AST) and alanine aminotransferase (ALT). While AST is also found in other tissues, such as the heart, blood, and skeletal muscle, ALT is more specific for liver. The aminotransferases are released by hepatocytes with cell injury or death. The reference range is approximately 10 to 40 U/L for AST and 15 to 40 U/L for ALT. The magnitude of the elevation of aminotransferases and the ratio of AST to ALT can help suggest the cause of liver disease. Mild elevation (<5 times the upper limit of normal) of the ALT or AST, with ALT > AST, is frequently found with chronic liver disease, including chronic viral hepatitis, fatty liver, and medications. Probably the most common cause of persistently elevated unexplained aminotransferases is fatty infiltration of the liver. Less common causes of mildly elevated aminotransferases with ALT > AST include autoimmune hepatitis, hemochromatosis, alpha-1 antitrypsin deficiency, Wilson’s disease, metastatic disease, and cholestatic liver disease. Mild aminotransferase elevations with AST > ALT are more suggestive of alcohol-related liver disease, but can also occur with cirrhosis and fatty liver. With alcoholic hepatitis, AST levels typically are approximately twice ALT levels, but the AST levels rarely are greater than 300 U/L. Marked elevations (greater than 15 times upper limit of normal) of AST and ALT suggest significant necrosis, such as seen in acute viral or drug-induced hepatitis, in ischemic hepatitis, or as can occur with acute biliary obstruction (Green and Flamm, 2002). However, the magnitude of elevation of the aminotransferases does not necessarily correlate with the severity of underlying liver disease or the prognosis. In fact, normal or minimally elevated aminotransferases may be seen in patients with end-stage liver disease.
When AST is elevated without elevation of ALT, one should consider extrahepatic causes, particularly myocardial or skeletal muscle sources. When AST and ALT are elevated approximately the same, a hepatic origin is most likely. Table 15-6 compares the differences in liver function tests between hepatocellular and obstructive disorders.

Table 15-6 Pattern of Liver Function Elevation

| Test | Hepatocellular Disorders | Obstructive Disorders |
|---|---|---|
| Bilirubin | + | ++ |
| Aminotransferases | +++ | + |
| Alkaline phosphatase | + | ++ |
| γ-Glutamyltransferase | + | ++ |
| Albumin | Decreased | Normal |
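The magnitude thresholds and AST:ALT comparison described in this section can be expressed as a small helper, shown here only to make the arithmetic explicit. It is an illustrative sketch, not a diagnostic rule: the upper limit of 40 U/L is taken from the approximate reference range quoted above, the function name is hypothetical, and the 5-to-15-fold band is labeled as an assumption because the text does not characterize it.

```python
def describe_aminotransferases(ast, alt, upper_limit=40.0):
    """Summarize the size of the elevation and which enzyme predominates.
    `upper_limit` is the laboratory's upper limit of normal in U/L."""
    fold = max(ast, alt) / upper_limit
    if fold <= 1:
        magnitude = "within reference range"
    elif fold < 5:
        magnitude = "mild (<5 times upper limit)"
    elif fold > 15:
        magnitude = "marked (>15 times upper limit)"
    else:
        # Assumption: the text does not name the band between 5 and 15 times.
        magnitude = "intermediate (5 to 15 times upper limit)"

    if ast > alt:
        direction = "AST > ALT"
    elif alt > ast:
        direction = "ALT > AST"
    else:
        direction = "AST = ALT"
    return magnitude, direction


# Hypothetical results in U/L.
print(describe_aminotransferases(ast=180, alt=85))
print(describe_aminotransferases(ast=1200, alt=1500))
```

Because reference ranges differ among laboratories, the upper limit should be replaced with the reporting laboratory's own value, and the output describes only a pattern, not a cause.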

Amylase and Lipase

Pancreatic disease, particularly acute pancreatitis, is often associated with elevations in amylase and lipase. Table 15-7 lists common causes of elevated amylase and lipase. Lipase levels have greater sensitivity and specificity for pancreatic disease than amylase levels. Because there are many different assays for amylase and lipase, with different reference ranges, physicians should consult their laboratory’s reference range to determine their upper limits of normal. Amylase and lipase values increase 3 to 6 hours after the onset of acute pancreatitis, both peaking at approximately 24 hours. Amylase levels fall to normal in 3 to 5 days; lipase levels return to normal in 8 to 14 days. Because of exocrine insufficiency caused by recurrent pancreatitis, amylase levels tend to be lower when alcohol is the cause of pancreatitis, as opposed to gallstone or drug-induced pancreatitis. Pancreatitis is likely when the amylase is elevated to three times the upper limit of normal. When lipase levels are more than five times normal, pancreatitis is virtually always present. A normal amylase value, however, does not exclude pancreatitis, especially when induced by hypertriglyceridemia.

Table 15-7 Causes of Elevated Amylase and Lipase Levels

| Amylase | Lipase |

|---|---|

| Pancreatic Diseases | |

| Nonpancreatic Diseases | |

Antinuclear Antibodies

Antinuclear antibodies (ANAs) are autoantibodies against parts of the cell’s nucleus. Combined with clinical features, ANA testing can help diagnose certain collagen vascular disorders (Table 15-8). The likelihood that an ANA test will help with diagnosis depends on the pretest probability of disease. ANA tests are reported as negative (no staining) or positive at the highest cutoff of dilution of the serum that shows immunofluorescent nuclear staining. If positive, the description of the pattern is noted. When the ANA test is positive, testing for specific nuclear antigens should be guided by the clinical findings.

Table 15-8 Conditions Associated with Positive Antinuclear Antibody (ANA) Test

| Usefulness of ANA | Condition |
|---|---|
| ANA very useful for diagnosis | Systemic lupus erythematosus |
| | Systemic sclerosis |
| ANA somewhat useful for diagnosis | Sjögren’s syndrome |
| | Polymyositis-dermatomyositis |
| ANA very useful for monitoring or prognosis | Drug-associated lupus |
| | Mixed connective tissue disease |
| | Autoimmune hepatitis |
| ANA not useful or has no proven value for diagnosis, monitoring, or prognosis | Rheumatoid arthritis |
| | Multiple sclerosis |
| | Thyroid disease |
| | Infectious diseases |
| | Idiopathic thrombocytopenic purpura |
| | Fibromyalgia |

From Solomon DH, Kavanaugh AJ, Schur PH, et al. Evidence-based guidelines for the use of immunologic tests: antinuclear antibody testing. Arthritis Rheum 2002;47:434-444.

Bilirubin

Probably the most common cause of unconjugated hyperbilirubinemia is Gilbert’s syndrome, a benign condition that affects up to 5% of the population. In Gilbert’s syndrome, only the unconjugated bilirubin is elevated; liver enzyme levels are normal. Other causes of unconjugated hyperbilirubinemia include hemolysis, ineffective erythropoiesis (as in megaloblastic anemias), or a recent hematoma. With normal hepatic function, hemolysis is not associated with bilirubin levels greater than 5 mg/dL. In an asymptomatic person with mild unconjugated hyperbilirubinemia (<4 mg/dL), a presumptive diagnosis of Gilbert’s syndrome can be made if the patient is taking no medications that cause elevated bilirubin, there is no evidence of hemolysis, and the liver enzymes are normal (Green and Flamm, 2002). Conjugated hyperbilirubinemia generally occurs with defects of hepatic excretion, including extrahepatic obstruction, intrahepatic cholestasis, cirrhosis, hepatitis, and toxins. Bilirubinuria is a fairly sensitive marker for biliary obstruction and may occasionally be found before jaundice is evident.

Calcium

The etiology of hypercalcemia is either hyperparathyroidism or malignancy in more than 90% of hypercalcemic patients. In the ambulatory setting, most patients with hypercalcemia have hyperparathyroidism. Typically the hypercalcemia of hyperparathyroidism is modest, with calcium levels less than 11 mg/dL and minimal symptoms. Hospitalized patients are more likely to have malignancy as a cause of hypercalcemia. Calcium levels greater than 13 mg/dL are usually associated with malignancy. Intact parathyroid hormone (PTH, parathormone) levels can differentiate hyperparathyroidism from other causes of hypercalcemia. Nonhyperparathyroid causes of hypercalcemia will give low or “normal” intact PTH levels in a setting of hypercalcemia, whereas the PTH level will be increased in hyperparathyroidism. Occasionally, patients with a family history of hypercalcemia show a reduction in calcium excretion and have familial hypocalciuric hypercalcemia. Other causes of hypercalcemia are related to increased gastrointestinal (GI) absorption, increased bone resorption, and decreased renal excretion (Table 15-9).

Table 15-9 Causes of Calcium Abnormalities

| Hypercalcemia |

| Hyperparathyroidism (primary and secondary) |

| Malignancies: breast, lung, prostate, renal, myeloma, T-cell leukemia, lymphoma |

| Drugs |

| Thiazide diuretics |

| Milk-alkali syndrome |

| Vitamin D intoxication |

| Granulomatous diseases |

| Sarcoidosis |

| Tuberculosis |

| Chronic renal failure |

| Immobilization |

| Hyperthyroidism |

| Hypocalcemia |

| Hypomagnesemia |

| Hypoparathyroidism |

| Malabsorption of calcium or vitamin D |

| Acute pancreatitis |

| Rhabdomyolysis |

| Hyperphosphatemia |

| Chronic renal failure |

| Transfusion of multiple units of citrated blood |

| Drugs |

| Loop diuretics |

| Phenytoin |

| Phenobarbital |

| Cisplatin |

| Gentamicin |

| Pentamidine |

| Ketoconazole |

| Calcitonin |

Carcinoembryonic Antigen

Carcinoembryonic antigen (CEA), an oncofetal glycoprotein antigen, has been mainly used in the evaluation of patients with adenocarcinomas of the GI tract, especially colorectal cancer. CEA may be elevated in benign as well as malignant diseases (Table 15-10). CEA is not recommended as a screening test for occult cancer (including colorectal) because of its low sensitivity and specificity, but it may be used as supportive evidence in a patient undergoing diagnostic evaluation because of signs and symptoms of colon cancer. Its main value is in monitoring for persistent, metastatic or recurrent colon cancer after surgery. A preoperative elevation should return to normal in 6 to 12 weeks (CEA half-life, 2 weeks), if all disease has been resected. The liver metabolizes CEA, and therefore hepatic diseases can result in delayed clearance. Treatment (surgery, radiation, chemotherapy) may produce transient artifactual elevations. CEA has a 97% sensitivity for detecting recurrence in the patient whose postoperative CEA value has returned to normal, and 66% sensitivity for recurrence in the patient with normal preoperative levels.

Table 15-10 Conditions Associated with Elevated Carcinoembryonic Antigen (CEA) Level

| Disease | Patients with Elevated CEA (%) |
|---|---|
| Carcinoma of endodermal origin (colon, stomach, pancreas, lung) | 60-75 |
| Colon cancer | |
| Overall | 63 |
| Dukes Stage A | 20 |
| Dukes Stage B | 58 |
| Dukes Stage C | 68 |
| Lung cancer | |
| Small cell carcinoma | About 33 |
| Non–small cell carcinoma | About 67 |
| Carcinoma of nonendodermal origin (e.g., head and neck, ovary, thyroid) | 50 |
| Breast cancer | |
| Metastatic disease | ≥50 |
| Localized disease | About 25 |
| Acute nonmalignant inflammatory disease, especially gastrointestinal tract (e.g., ulcerative colitis, regional enteritis, diverticulitis, peptic ulcers, chronic pancreatitis) | Variable |
| Liver disease (alcoholic cirrhosis, chronic active hepatitis, obstructive jaundice) | Variable |
| Renal failure, fibrocystic breast disease, hypothyroidism | Variable |
| Healthy persons | |
| Nonsmokers | 3 |
| Smokers | 19 |
| Former smokers | 7 |
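The 6-to-12-week normalization window quoted for CEA after complete resection is consistent with the stated 2-week half-life, as the sketch below shows. It assumes simple exponential clearance with no ongoing CEA production, and the preoperative level and upper limit used in the example are hypothetical values, not figures from the text.

```python
import math

HALF_LIFE_WEEKS = 2.0  # CEA half-life as stated in the text


def fraction_remaining(weeks, half_life=HALF_LIFE_WEEKS):
    """Fraction of the starting level left after `weeks` of clearance."""
    return 0.5 ** (weeks / half_life)


def weeks_to_reach(start_level, target_level, half_life=HALF_LIFE_WEEKS):
    """Weeks needed for a level to fall from start_level to target_level."""
    return half_life * math.log2(start_level / target_level)


print(f"remaining after 6 weeks: {fraction_remaining(6):.3f}")
print(f"remaining after 12 weeks: {fraction_remaining(12):.4f}")

# Hypothetical example: preoperative level 40, upper limit of normal 5
# (same units); both numbers are assumptions chosen for illustration.
print(f"weeks to normalize: {weeks_to_reach(40, 5):.1f}")
```

Under these assumptions, a level that fails to fall along this curve, or that takes much longer to normalize, would fit the residual disease or delayed hepatic clearance described above.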

Chloride

Chloride is the most abundant extracellular anion. Measurements of serum chloride are not useful for routine screening but may help in the evaluation of acid-base disturbances. The reference range of chloride is 98 to 109 mmol/L. In volume expansion, serum chloride generally increases, and in volume depletion, serum chloride is reduced. Hypochloremia occurs with loss of chloride-containing body fluids, such as with prolonged vomiting, burns, diuretic use, and salt-wasting nephropathy. Hypochloremia is commonly seen with metabolic alkalosis. Hyperchloremia occurs with non–anion gap metabolic acidosis, usually related to diarrhea or renal tubular acidosis, and with administration of large amounts of sodium chloride.

Coagulation Studies

When monitoring heparin therapy, the most widely used target for anticoagulation is a PTT 1.5 to 2.5 times the upper limit of normal. However, because of the great variation in thromboplastins used in different PTT assays, PTT results vary widely among laboratories. Therapeutic heparin levels, as measured by anti–factor Xa units, are approximately 0.3 to 0.7 anti–factor Xa IU/mL. With plasma concentrations of heparin at 0.3 IU/mL, investigators have found that mean PTT values ranged from 48 to 108 seconds, depending on the laboratory methods used. The American College of Chest Physicians recommends against the use of a fixed PTT therapeutic range for the treatment of venous thrombosis; instead, they recommend that each laboratory determine the PTT range that corresponds to a therapeutic heparin level: 0.3 to 0.7 IU/mL by factor Xa dilution. Anti–factor Xa levels may also be used to monitor appropriate anticoagulation doses in patients with obesity or renal failure, because these groups are more likely to be over-anticoagulated using weight-based heparin dosing (Hirsch et al., 2008).
