History and Background

The importance of infusing scientific research into medical education and clinical care was emphasized in the Flexner Report, published in 1910. As Abraham Flexner noted: “If the sick are to reap the full benefit of recent progress in medicine, a more uniformly arduous and expensive medical education is demanded” (1). This observation coincided with numerous scientific breakthroughs that led to repudiation of folk treatments, such as bleeding and purging, in favor of treatments based on the scientific method. To meet new challenges, modern medicine developed education standards that emphasized clinical practice and scientific research (2). Even with this innovation, however, research and clinical practice remained somewhat isolated in separate realms until the emergence of evidence-based medicine.

Evidence-based medicine bridges the gap between clinical practice and research and provides a method to systematically select and apply research findings as part of the clinical decision-making process. Using principles and methods derived from clinical epidemiology, the founders of evidence-based medicine sought to ground medical decision making in rigorous scientific evidence to invalidate previously accepted but ineffective diagnostic tests, prognostic protocols, and clinical treatments and replace them with new, more powerful, accurate, efficacious, and safer ones (3).

Evidence-based medicine offers a framework and objective methods for making clinical decisions by embedding research in the clinical decision-making process. Consequently, evidence-based medicine promotes the translation of research into practice and provides a mechanism for ensuring that research evidence informs clinical decisions (4).

Over the last decade, evidence-based medicine has attracted considerable attention because it meets an identified need. As noted in the 2001 Institute of Medicine report, Crossing the Quality Chasm, an evidence-based approach to clinical practice provides a sound method for making clinical decisions; reduces idiosyncratic variations in practice; and bridges the gap between knowledge and practice (5).

Despite the potential benefits, there are concerns about the movement towards evidence-based medicine. A literature survey of 47 articles published from 1966 to 1999 noted the most commonly cited barriers to evidence-based medicine: the need to develop new skills; difficulty applying evidence to the care of individual patients; and limited time and resources (6). Over the last 10 years, an explosion of Internet-based resources that support evidence-based practice (Tables 80-1 and 80-2) has addressed some of the perceived barriers. These web-based resources provide clinicians with evidence-based practice background information, references, and self-study guides. Clinicians have easy access to evidence summaries and reviews that meet specific quality criteria. Many reviews also include a “clinical bottom-line” that summarizes the clinical importance of the evidence presented.

Criticisms of evidence-based medicine also include the following misperceptions: evidence-based medicine devalues clinical experience, ignores patient values and preferences, and is a cookbook approach to medicine (6). However, these misperceptions do not reflect the true spirit of evidence-based medicine. Dr. David Sackett, one of the founders of evidence-based medicine, states: “Evidence-based medicine is the conscientious, explicit, and judicious use of current best evidence in making decisions about the care of individual patients” (7). Evidence-based medicine does not blindly adhere to specific criteria for patient care. Rather, it serves as an adjunct to clinical decision-making. Finally, evidence-based medicine is not limited to use of randomized controlled trials. Evidence-based decision making emphasizes finding the best available evidence. The evidence is then critically appraised and the relative strengths and weaknesses are considered before applying the findings to clinical practice.

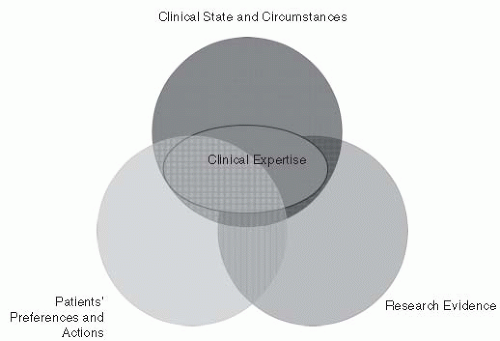

Evidence-based decisions are defined as the integration of best research evidence with clinical expertise and patient values (7). A model for evidence-based clinical decisions emphasizes three elements (clinical state and circumstances, patient preferences and actions, and research evidence) along with the fourth element, clinical expertise, that overlies the other three (Fig. 80-1) (8). This model recognizes the important role that clinical expertise plays in the clinical decision-making process. Clinical expertise is required to size up the patient’s clinical state, communicate with patients to determine their preferences, and determine how to best apply research findings to the individual patient (8).

Challenges to Adopting an Evidence-Based Approach in Rehabilitation Practice

There are several challenges to adopting an evidence-based approach in rehabilitation. Evidence-based medicine was developed for use in general medicine and focuses on mortality or the presence or absence of disease. In contrast, rehabilitation medicine is a unique area of medicine that focuses on functioning, both as an outcome and an area for clinical assessment (9). A unifying model for rehabilitation, developed based on the International Classification of Functioning, Disability and Health (ICF) framework, defines rehabilitation as a health strategy that aims to help people “achieve and maintain optimal functioning in interaction with their environment” (10). Because the focus of rehabilitation is so different from that of general medicine, it is often difficult to translate evidence-based medicine concepts into a framework that is useful for rehabilitation. Another problem is that evidence-based medicine requires knowledge of clinical epidemiology (11), a field that deals with the solution of clinical problems using epidemiologic methods (12). Few rehabilitation professionals are trained in clinical epidemiology, so most must acquire evidence-based medicine skills independently through continuing education or self-study. These issues notwithstanding, the evidence-based medicine model has much to offer rehabilitation.

Over the last decade, evidence-based medicine has been widely adopted by health care disciplines and is now commonly referred to by a more generic term: evidence-based practice. It is important that the rehabilitation field consider ways to infuse the principles of evidence-based practice into clinical practice. This chapter has the following aims: inspire rehabilitation professionals to adopt an evidence-based approach; help translate evidence-based medicine into terminology that is meaningful to the rehabilitation field; and provide strategies to implement an evidence-based practice approach to clinical practice.

The Evidence-Based Practice Process

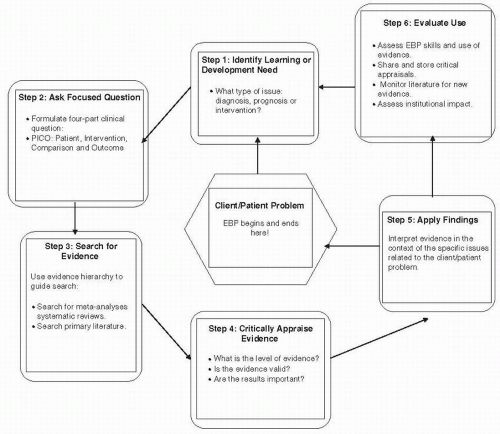

Figure 80-2 presents a schematic diagram of the steps involved in the evidence-based practice process. As the figure illustrates, evidence-based practice is patient focused. The first five steps in the evidence-based practice process (identifying the learning or development need, asking a focused question, searching for evidence, critically appraising evidence, and applying research evidence) begin and end with the patient. A sixth step, evaluating the use of evidence, adds a quality improvement component to the process. Clinicians interested in adopting an evidence-based approach will find a variety of resources on the Internet. Table 80-2 summarizes Internet resources that serve as a guide to each step of the evidence-based practice process.

Step 1: Identify Learning or Development Need

The first step in the evidence-based practice process is to identify the learning issue and select the category of research evidence appropriate for the issue. For example, the learning issue may be uncertainty about which tests or clinical examination findings to consider in determining the presence or absence of a disease, impairment, or disorder—evidence related to diagnosis. A learning issue may arise when the patient inquires about the ability to return to work or the family inquires about the likelihood of a patient being able to return to home or to her/his previous level of functioning—evidence related to prognosis. Questions about other rehabilitation interventions that may yield a better outcome present another type of learning issue—evidence related to intervention. For each category of evidence—diagnosis, prognosis, or intervention—it is important to understand specific evidence-based practice concepts and terms.

Step 2: Ask a Focused Question

Once the learning issue and the appropriate category of research (diagnosis, prognosis, or intervention) are identified, a focused clinical question is formulated. A clinical question consists of four parts: patient, intervention, comparison, and outcome (PICO). Table 80-3 provides an example of the four-part clinical question. Clinical questions are constructed for each category of evidence. For example, a question related to diagnosis is framed as: For patients with shoulder pain, can clinical tests, compared to arthroscopy, identify patients with rotator cuff tears? A question related to prognosis is posed: For patients with a quadriceps contusion injury, can clinical tests predict the time to return to sports? And a question related to intervention is structured: For elderly individuals with chronic disease, can a strength training exercise program reduce disability? Thus, a well-constructed clinical question establishes a link between patient problems, research evidence, and the clinical decision.
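The four-part structure of a clinical question can be sketched as a small data structure. The following Python example is only an illustration: the class name `ClinicalQuestion`, its fields, and the `as_text` helper are hypothetical and are not part of any evidence-based practice tool. The comparison is treated as optional, an assumption made here because the prognosis and intervention examples above do not name one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ClinicalQuestion:
    """Hypothetical container for the four PICO parts of a clinical question."""
    patient: str                       # P: patient or population
    intervention: str                  # I: test, prognostic factor, or treatment
    outcome: str                       # O: outcome of interest, phrased as a verb phrase
    comparison: Optional[str] = None   # C: optional comparison (assumption: may be omitted)
    category: str = "intervention"     # diagnosis, prognosis, or intervention

    def as_text(self) -> str:
        """Render the question in the 'For ..., can ... ?' form used in the text."""
        compared = f", compared to {self.comparison}," if self.comparison else ""
        return f"For {self.patient}, can {self.intervention}{compared} {self.outcome}?"


# The diagnosis and intervention examples from the text, expressed as PICO parts.
diagnosis_question = ClinicalQuestion(
    patient="patients with shoulder pain",
    intervention="clinical tests",
    comparison="arthroscopy",
    outcome="identify patients with rotator cuff tears",
    category="diagnosis",
)
intervention_question = ClinicalQuestion(
    patient="elderly individuals with chronic disease",
    intervention="a strength training exercise program",
    outcome="reduce disability",
)

print(diagnosis_question.as_text())
print(intervention_question.as_text())
```

Keeping the four parts as separate fields, rather than as one sentence, makes it easier to reuse each part as a search term in the next step.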

Step 3: Search for Research Evidence

Medicine has witnessed tremendous growth in research over the last century and this growth is particularly evident in the volume of available information. Each year, Medline indexes over 560,000 new articles and the Cochrane Center adds 20,000 new trials. The volume of information presents a challenge because published research includes many preliminary or exploratory studies. Knowledge that has been rigorously tested and is of sufficient importance to influence practice represents only a small fraction of the published research (13). Thus, we do not suffer from a lack of information but from an inability to efficiently process and organize the plethora of available information. Evidence-based practice provides useful strategies for the daunting task of keeping up-to-date on research literature. There are three strategies for conducting an effective and efficient search: (a) organize the search based on the hierarchy of research evidence quality; (b) use evidence-based practice search engines; and (c) search for evidence that has been summarized and rated for quality.

Research Evidence Hierarchy

Since the goal of evidence-based practice is to apply the best available evidence, the literature search begins by looking for the highest quality evidence. A key concept in evidence-based practice is that research evidence is not equal in value. Evidence-based practice ranks research evidence based on a hierarchy that considers the degree to which the study design reduces bias and threats to validity. Professional organizations and evidence-based practice work groups have devised specific guidelines for rating evidence (14) that reflect the following values: controlled studies are ranked higher than uncontrolled studies; prospective studies are considered stronger than retrospective studies; and randomized studies are better than nonrandomized studies (15). Based on quality considerations, research evidence is categorized on a scale from Level I to V: high quality research with strong methodology is rated at the highest level (Level I) and expert opinion is ranked at the lowest level of evidence (Level V). Studies with weaknesses in research design and implementation or wide confidence intervals (CIs) are ranked as Level II to IV.

Systematic reviews play an important role in evidence-based practice. In contrast to traditional opinion-based literature reviews, systematic reviews use a specific protocol to identify, critique, and summarize relevant studies that address a similar clinical question. Bias is minimized in systematic reviews because a comprehensive and reproducible process for searching the literature is outlined. Systematic reviews also assess the methodological quality of studies (16), which is defined as the extent to which “all aspects of a study’s design and conduct can be shown to protect against systematic bias, nonsystematic bias, and inferential error” (17).

It is important to consider the type of research (diagnosis, prognosis, or intervention) when evaluating the strength of research evidence. For all types of evidence, a valid systematic review of quality research is the highest level of evidence while expert opinion is the lowest. However, for the other levels of evidence, the criteria for research quality are somewhat different for diagnosis, prognosis, or intervention studies. Evidence related to diagnosis compares the diagnostic test results to a “gold” standard, which is generally an accepted proof of the presence or absence of the disease or disorder. Therefore, an important consideration for ranking evidence from diagnostic studies is to determine how the gold, or reference, standard is applied (15). Studies where the gold or reference standard is consistently applied blindly or objectively to all subjects are ranked higher than studies where the reference standard is not consistently applied. Furthermore, studies that validate diagnostic test results from a previous study are ranked higher than exploratory studies (14). Prognosis studies are concerned with examining the effect of patient characteristics on outcomes (15). Inception or prospective studies are rated higher than retrospective studies or studies using untreated subjects from randomized controlled trials (14). Intervention studies evaluate the effect of treatments on outcomes. Randomized controlled trials help to eliminate potential bias, and studies using randomized controlled trials are rated higher than cohort and case-control studies.

Based on the hierarchy of research evidence, an efficient literature search strategy begins by looking for systematic reviews that summarize the results of many different studies and provide an overview of the body of research evidence that addresses a clinical question. If no appropriate systematic reviews are located, the search continues by focusing on specific well-designed individual studies until the best studies are located.
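The "start at the top of the hierarchy and work down" search strategy can be sketched in a few lines of Python. This is a minimal illustration for intervention studies only, not a published rating scheme: the `DESIGN_RANK` numbers, the `best_available` function, and the sample records are all hypothetical. The ranks encode only the relative order stated in the text (systematic reviews first, randomized controlled trials above cohort and case-control studies, expert opinion last) and are not the Level I to V labels themselves.

```python
from typing import Dict, List

# Lower rank = stronger design. Hypothetical ranks that only preserve the
# relative order given in the text; cohort and case-control share a tier
# because the text does not order them against each other.
DESIGN_RANK: Dict[str, int] = {
    "systematic_review": 1,
    "randomized_controlled_trial": 2,
    "cohort": 3,
    "case_control": 3,
    "expert_opinion": 4,
}


def best_available(records: List[dict]) -> List[dict]:
    """Return the records from the strongest design tier that is present.

    Records with an unrecognized design are ignored rather than guessed at.
    """
    ranked = [r for r in records if r.get("design") in DESIGN_RANK]
    if not ranked:
        return []
    top = min(DESIGN_RANK[r["design"]] for r in ranked)
    return [r for r in ranked if DESIGN_RANK[r["design"]] == top]


# Hypothetical search results for a single clinical question.
candidates = [
    {"title": "Commentary A", "design": "expert_opinion"},
    {"title": "Trial B", "design": "randomized_controlled_trial"},
    {"title": "Cohort C", "design": "cohort"},
    {"title": "Trial D", "design": "randomized_controlled_trial"},
]

best = best_available(candidates)
print([r["title"] for r in best])
```

Because no systematic review is present in the sample, the function falls back to the two randomized controlled trials, mirroring the fallback described above. Design tier alone does not settle quality; the selected studies still need critical appraisal.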

Search Engines

The evidence-based practice movement spawned the development of search engines designed to streamline the literature search process. Some search engines incorporate an evidence-based practice framework. The search is organized by the type of research evidence and the results are presented in order, based on the hierarchy of research evidence. One useful resource is SUMsearch, which is described as a “meta” search engine because it searches multiple databases. SUMsearch results are then organized according to the hierarchy of evidence. SUMsearch is a good starting point for conducting a literature search because it is an efficient strategy for viewing a summary of the available evidence in a specific area and it is especially effective for locating information on systematic reviews and guidelines (18).

Databases of systematic reviews can also be searched directly. The Cochrane Collaboration, an international group dedicated to combining similar randomized trials to produce more statistically sound evidence through systematic reviews, is one of the best resources for systematic reviews. Complete systematic reviews require a subscription, but abstracts of the reviews are available via a searchable database at the Cochrane website. The Cochrane Library includes reviews for the top ten causes of disability in developed and developing nations and, therefore, it is an important resource for evidence-based rehabilitation (19). Moreover, the Cochrane Collaboration includes study groups dedicated to examining topics relevant to rehabilitation, such as bone, joint, and muscle trauma; movement disorders; multiple sclerosis; musculoskeletal and neuromuscular disorders; and stroke (20).

If the search fails to yield a relevant and valid systematic review or evidence summary, the next step is to search the primary literature. PubMed, a service of the U.S. National Library of Medicine and the National Institutes of Health, provides an extensive database of research abstracts (21). PubMed is widely recognized as the best resource for information from original studies (18). PubMed recently added an evidence-based medicine search filter termed “clinical queries.” This feature allows the user to conduct a search based on the category of evidence (diagnosis, prognosis, or therapy) and to request results that are “focused” to include a few of the most relevant studies (specific) or “expanded” to include a wider range of studies (sensitive).

Evidence Summaries

Another search strategy for busy clinicians is to search for evidence that has already been summarized and critiqued. The evidence-based practice movement fostered the development of secondary resources or “predigested” evidence summaries (see Table 80-2). Evidence summaries help to address concerns that evidence-based practice demands too much time and requires research critiquing skills beyond the ability of most clinicians. One example, the Database of Abstracts of Reviews of Effects (DARE), is a free, searchable database that includes evidence summaries relevant to rehabilitation (22). Each summary must meet specific quality criteria to be included in the DARE database. The evidence summary reviews key elements of the study and concludes with a critical commentary on the study. Discipline-specific evidence summaries are also available. The Physiotherapy Evidence Database (23) comprises abstracts of evidence-based clinical practice guidelines, systematic reviews, and clinical trials. The methods for the clinical trials are reviewed and rated on a ten-point scale (23). OTseeker is another database with abstracts of systematic reviews and clinical trials relevant to occupational therapy. The clinical trials are rated on an eight-point internal validity score and a two-point statistical reporting score (24). Online journals, such as ACP Journal Club and Evidence-Based Medicine, also summarize results of clinical trials. Secondary sources present clinically relevant research that has been critically appraised and provide an important resource for busy clinicians. However, it is still important to examine the source and consider the validity and accuracy of the research summary and appraisal. Evidence summaries streamline the evidence-based practice process by simplifying the critical appraisal of evidence, which is described in the next step.

Step 4: Critically Appraise the Evidence

Once the highest level of evidence for a specific clinical question is located, the evidence is critically appraised. The first item of the critical appraisal process is to assess the validity of the research evidence. Unfortunately, specific standards for determining the validity of a study have not been established and a proliferation of critical appraisal tools has led to considerable confusion. One systematic review identified 121 published critical appraisal tools. While the tools vary somewhat, the most frequently cited areas evaluated to establish the validity of research evidence for efficacy studies were: eligibility criteria, appropriate statistical analysis, random allocation of subjects, consideration of outcome measures used, sample size justification/power calculation, study design reported, and assessor blinding (25). The critical appraisal process, which varies for different types of research evidence, is described in the following sections.

Systematic Reviews and Meta-Analyses

Similar to systematic reviews, meta-analyses use a specific protocol to locate and assess research articles addressing a specific question. However, meta-analyses go one step further by combining data to yield a summary statistic. The focus of the meta-analysis or systematic review should be clear, with detailed information on the population, intervention, and outcomes. Systematic reviews should meet criteria for establishing homogeneity. Specifically, the studies should be similar in terms of patient characteristics (e.g., age, type of disease/disorders), interventions used, outcomes measured, and the study methods (e.g., randomized trials, cohort studies) (26). The search strategy used to identify research articles should include multiple search engines to ensure that all relevant studies are considered (27). The process used to include studies should be outlined and the criteria used to evaluate studies clearly stated (28). Each study should be evaluated in terms of its methodological quality; its precision, or the width of the CI around the result; and its external validity, or the extent to which the results can be generalized (27).
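To make "combining data to yield a summary statistic" concrete, the sketch below pools study results with a fixed-effect, inverse-variance weighted average. The text does not say which pooling method a given meta-analysis uses, so this is one common approach chosen for illustration, and it assumes the homogeneity criteria described above are met. The function name `pooled_fixed_effect` and the example numbers are hypothetical, and the 95% CI uses the normal approximation (1.96 standard errors).

```python
import math
from typing import List, Tuple


def pooled_fixed_effect(
    estimates: List[float], std_errors: List[float]
) -> Tuple[float, float, Tuple[float, float]]:
    """Pool study effect estimates with inverse-variance (fixed-effect) weights.

    Returns the pooled estimate, its standard error, and an approximate 95% CI.
    """
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("need one standard error per estimate, and at least one study")
    if any(se <= 0 for se in std_errors):
        raise ValueError("standard errors must be positive")

    # More precise studies (smaller standard error) receive larger weights.
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)

    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci


# Hypothetical effect sizes and standard errors from three similar trials.
effects = [0.30, 0.10, 0.25]
errors = [0.10, 0.20, 0.15]

estimate, se, (low, high) = pooled_fixed_effect(effects, errors)
print(f"pooled effect {estimate:.3f}, 95% CI {low:.3f} to {high:.3f}")
```

The pooled CI is narrower than that of any single study, which illustrates why the width of the CI is used as a measure of precision when appraising a review.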

As the number of systematic reviews continues to grow, it is important to ensure that the information is up-to-date. Unfortunately, there is little research to support how and when to update systematic reviews, so it is important to consider if the information presented is still relevant (29).