Fig. 7.1

Integration of the overall ‘home’ system

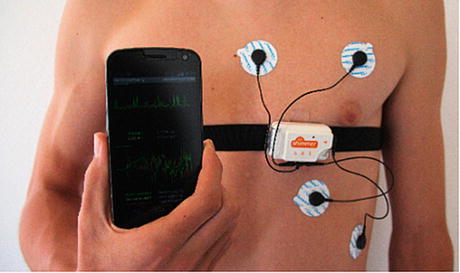

Fig. 7.2

ECG sensing with Shimmer2R

Since home-based rehabilitation may increase the risk of stroke recurrence, we decided to include an EEG sensor, a 2 × 7-node Emotiv EPOC EEG, which we use to monitor brain signals. We look for ‘flashing’ activity between the two brain hemispheres, which the participating physiotherapists indicated as a sign of a likely pre-event condition (Fig. 7.3).

Fig. 7.3

Emotiv EEG (a), sample brain activity (b)

This device offers the additional benefit of serving as a supplementary gaming interface. This shifts the patient’s perception of it from a preventive device to something they enjoy using to control games with the ‘power of the mind’. It also helps that Emotiv offers Unity3D support for its device, and that an even more powerful INSIGHT [13] sensor version is under development.

Currently, apart from searching for clues indicating pre-stroke risk and serving as a ‘mouse’-like user interface, we use the EPOC to establish a correlation between the mental intention to move a limb and the physical action. By combining its output with data from EMG sensors we aim to detect cases where the patient’s brain correctly issues a signal, e.g. to move an arm, but the patient cannot perform the movement, e.g. due to a broken nerve connection.

7.5.1 ‘Kinect Server’ Implementation

The principal user interface used to control games has been Microsoft Kinect, the Xbox version at first and then the Windows version once it was released in early 2012. Its combination of distance sensing with an RGB camera proved well suited both to full-body exercises (exploiting its embedded skeleton recognition) and to near-field exercises of the upper limbs. However, since Kinect was not designed for short-range scanning of partial bodies, the skeleton tracking could not be used for the latter, and we had to develop our own algorithms able to recognise arms, palms and fingers and distinguish them from background objects. This led to the development of the ‘Kinect server’, based on open source algorithms. The first implementation used the OpenNI drivers, which offered the opportunity to build our software for both MS Windows and Linux platforms; OpenNI was closed in April 2014 following the acquisition of PrimeSense by Apple [14].

The ‘Kinect Server’ is a custom development by RFSAT Ltd in the StrokeBack project. It allows remote connectivity to the Microsoft Kinect sensor, so that data from the sensor can be used on a variety of devices that are not natively supported by the respective SDK from Microsoft. The initial implementation of the Kinect Server was based on the OpenNI drivers for the Xbox version of the device and was later ported to the more general drivers supplied by Microsoft in Kinect SDK version 1.7 and subsequent versions.

The Kinect Server comprises two components:

Server side: runs on a software platform supported by the selected Kinect drivers. Its role is to obtain the relevant data from the Kinect sensor and make it available in a suitable form over the network to connected clients.

Client side: runs on any platform with a web browser that supports JavaScript. This covers almost any networked device, including tablets, a variety of smartphones and even Smart TVs.

The information made available by the server to clients includes the RGB image and the depth map, both as images, as well as the list of detected objects. Customisations include, for example, limiting the visibility (detection) area to reduce clutter from nearby objects, and focusing on a selected object (e.g. the central or the closest one).
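The two customisations mentioned above can be illustrated with a minimal sketch. It is not the Kinect Server code: the function names, the representation of the depth map as nested lists of millimetre values, and the convention that 0 marks a pixel without a reading are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

DepthMap = List[List[int]]  # rows of depth values in mm; 0 = no reading (assumed)


def restrict_visibility(depth: DepthMap,
                        x_min: int, x_max: int,
                        y_min: int, y_max: int,
                        z_min: int, z_max: int) -> DepthMap:
    """Return a copy of the depth map with pixels outside the window set to 0."""
    filtered = []
    for y, row in enumerate(depth):
        new_row = []
        for x, z in enumerate(row):
            inside = (x_min <= x <= x_max
                      and y_min <= y <= y_max
                      and z_min <= z <= z_max)
            new_row.append(z if inside else 0)
        filtered.append(new_row)
    return filtered


def closest_pixel(depth: DepthMap) -> Optional[Tuple[int, int, int]]:
    """Return (x, y, z) of the nearest valid pixel, or None if there is none."""
    valid = [(x, y, z)
             for y, row in enumerate(depth)
             for x, z in enumerate(row) if z > 0]
    if not valid:
        return None
    return min(valid, key=lambda p: p[2])


if __name__ == "__main__":
    sample = [[500, 800, 1500],
              [0, 700, 900],
              [600, 650, 3000]]
    window = restrict_visibility(sample, 1, 2, 0, 1, 400, 1000)
    print(window)
    print(closest_pixel(window))
```

Restricting the window first and only then searching for the closest pixel keeps nearby clutter outside the window from being picked up as the object of interest.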

Two modes of operation have been anticipated to enable its use for rehabilitation training in the project (refer to Fig. 7.4). If persistent network connectivity can be maintained, the server part can run on the home gateway and the client on a game console, while the game server (game repository and management of game results for each user) runs remotely on the same server that hosts the PHR platform. With this approach the home client does not need to deal with updating games to their latest versions or with managing results. If, however, network connectivity cannot be guaranteed, the game server needs to be hosted locally on the home gateway, alongside the Kinect Server.

Fig. 7.4

Network operational modes of the ‘Kinect Server’

Since the server has been implemented as a generic enabler, a supplementary gesture recognition component geared to the Kinect Server has also been developed. To achieve wide interoperability across devices and operating systems, we selected an approach based on a Python script, ‘palm_controls’, which detects specific gestures and maps them to custom keystrokes and mouse actions. The calling syntax and the list of features are shown below:

palm_controls <server IP> <server PORT> options

Server IP: network address where the Kinect Server is hosted

Server PORT: network port on which the server listens for connections

Options:

‘-lHH -rHH -uHH -dHH -sHH’

Defines keys pressed for left, right, up, down and click actions

HH is a hexadecimal number from the Microsoft character table:

Choosing a zero (0) for a given key disables mapping of the given gesture

‘-x??? -X??? -y??? -Y??? -z??? -Z???’

This restricts the 3D space in which objects are detected.

Parameters define Xmin, Xmax, Ymin, Ymax, Zmin and Zmax, as given below:

Xmin & Xmax are given in pixels in the range 0–320

Ymin & Ymax are given in pixels in the range 0–240

Zmin & Zmax are given in millimetres and refer to the distance from the sensor
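The calling syntax above could be parsed along the following lines. This is a hedged sketch using Python's standard argparse module, not the actual ‘palm_controls’ source: the attribute names (key_left, x_min, etc.), the defaults and the example key codes are assumptions, and only the option letters, the hexadecimal key format and the pixel ranges come from the description above.

```python
import argparse


def parse_key(text: str) -> int:
    """Parse a hexadecimal key code; 0 disables the mapping for that gesture."""
    return int(text, 16)


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="palm_controls")
    parser.add_argument("server_ip", help="address where the Kinect Server is hosted")
    parser.add_argument("server_port", type=int, help="port the server listens on")

    # Key mappings: -lHH -rHH -uHH -dHH -sHH (default 0 = disabled, an assumption).
    for flag, name in (("-l", "left"), ("-r", "right"), ("-u", "up"),
                       ("-d", "down"), ("-s", "click")):
        parser.add_argument(flag, dest=f"key_{name}", type=parse_key, default=0)

    # Detection volume: X and Y in pixels, Z in millimetres.
    # Defaults for X and Y span the stated ranges; Z has no assumed default.
    for flag, name, default in (("-x", "x_min", 0), ("-X", "x_max", 320),
                                ("-y", "y_min", 0), ("-Y", "y_max", 240),
                                ("-z", "z_min", None), ("-Z", "z_max", None)):
        parser.add_argument(flag, dest=name, type=int, default=default)
    return parser


if __name__ == "__main__":
    # Illustrative values only; the address, port and key codes are made up.
    args = build_parser().parse_args(
        ["192.168.0.10", "8080", "-l25", "-r27", "-u26", "-d28", "-s0",
         "-x40", "-X280", "-z500", "-Z900"])
    print(args)
```

Option letters are case-sensitive, so ‘-x’ and ‘-X’ are distinct, and a value may be attached directly to its letter as in the syntax shown above.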

7.5.2 Embedded Kinect Server

The limitations of the Kinect in terms of compatibility with certain operating systems, the diversity of often-incompatible drivers and the restriction to high-end computing platforms pushed us to investigate alternative ways of interacting with Kinect devices. This led to an attempt to develop an ‘Embedded Kinect Server’, or EKS. Our idea was to use a small embedded computer such as the Raspberry Pi [15] or similar, and to allow the client device running the game to access data from the EKS via a local wireless (or wired) network. Such an approach would remove the physical connectivity restriction of the Kinect and provide 3D scanning capability to any device connected to the network.

Various embedded platforms were investigated, including the Raspberry Pi, eBox 3350 [16] and Panda board [17] (Fig. 7.5). Tests revealed an inherent problem with the physical design of the Kinect, shared by the Xbox version and the subsequent Windows version: it needs to draw a high current from the USB port to power its sensors, even though a separate power supply is still required.

Fig. 7.5

Embedded Kinect server deployment: Panda board (a) and physical prototype integrated with the MS Kinect for Windows sensor (b)

Hardware modifications of the Raspberry Pi aimed at increasing the current supplied to its USB ports, the use of a powered external USB hub and other workarounds all proved unsuccessful. To date the Panda board is the only embedded computer that has been able to maintain Kinect connectivity while running our EKS. In our tests we managed to run the Mario Bros game on an Android smartphone and to use the Kinect wirelessly to control the game with the patient’s wrists.

7.5.3 Rehabilitation Games Using the ‘Kinect Server’

The main features of our implementation include restricting the visibility window, filtering out the background beyond a prescribed distance, distinguishing between separate objects, etc. In this way we were able to implement a Kinect-based interface in which, following the requirements of our physiotherapists, the standard keyboard arrows are replaced with gestures of the palm (up, down, left, right and open/close to make a click). Such an interface allowed for the first game-based rehabilitation of stroke patients suffering from limited hand control. The tests were first made with the Mario Bros game, where all controls were achieved purely with movements of a single palm. The algorithm for analysing the wrist position and generating the respective keyboard clicks was developed initially in Matlab and then ported to Perl for deployment along with the Kinect server on embedded hardware.

The algorithm is shown in Fig. 7.6. It is based on the idea that, provided the wrist rests steadily on a support (a requirement from the physiotherapists), the patient’s fingers are always closer to the Kinect than the rest of the hand, which makes it easy to determine the palm position and the direction in which the fingers point. Under this condition we did not have to pay much attention to Kinect calibration and could avoid fixing the position of the hand support relative to the Kinect device. This allowed us to remove the background simply by disregarding anything more distant than the average palm length centred on the centre of gravity (i.e. the centre of the palm itself).
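The idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the algorithm of Fig. 7.6: here the background is removed by keeping only pixels within one palm length of the nearest point (rather than a band centred on the centre of gravity), the palm length of 190 mm and the dead zone are made-up values, and the gesture labels assume an unmirrored image whose y axis grows downwards.

```python
from typing import List, Optional, Tuple

PALM_LENGTH_MM = 190  # assumed average palm length, not a value from the text


def analyse_palm(depth: List[List[int]],
                 palm_length_mm: int = PALM_LENGTH_MM,
                 dead_zone_px: float = 2.0
                 ) -> Optional[Tuple[Tuple[float, float], str]]:
    """Return ((cx, cy), gesture) for the hand in a depth map given in mm.

    Pixels with depth 0 are treated as missing readings (assumption).
    """
    valid = [(x, y, z)
             for y, row in enumerate(depth)
             for x, z in enumerate(row) if z > 0]
    if not valid:
        return None

    # Fingers are assumed to be the part of the hand nearest to the sensor.
    tip = min(valid, key=lambda p: p[2])

    # Background removal: drop everything more than one palm length behind the tip.
    hand = [p for p in valid if p[2] <= tip[2] + palm_length_mm]

    # Centre of gravity of the remaining pixels approximates the palm centre.
    cx = sum(p[0] for p in hand) / len(hand)
    cy = sum(p[1] for p in hand) / len(hand)

    # Direction in which the fingers point, from palm centre to fingertip.
    dx, dy = tip[0] - cx, tip[1] - cy
    if max(abs(dx), abs(dy)) < dead_zone_px:
        gesture = "none"
    elif abs(dx) >= abs(dy):
        gesture = "right" if dx > 0 else "left"
    else:
        gesture = "down" if dy > 0 else "up"
    return (cx, cy), gesture


if __name__ == "__main__":
    far = 2000  # background depth in mm
    frame = [[far, far, far, far, far],
             [far, far, far, far, far],
             [far, 700, 650, 600, far],
             [far, far, far, far, 0],
             [far, far, far, far, far]]
    print(analyse_palm(frame, dead_zone_px=0.5))
```

Because everything is measured relative to the nearest point and the centre of gravity of the hand itself, the sketch needs no absolute calibration of the support position, which mirrors the reasoning given above.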