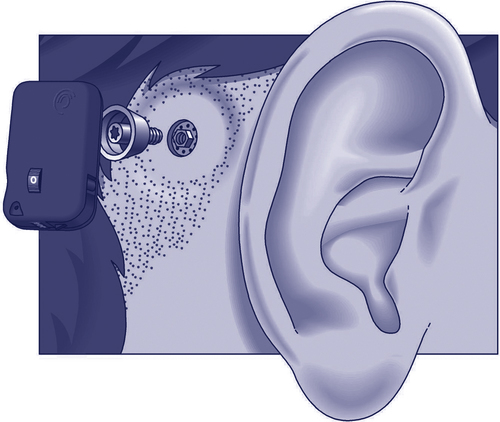

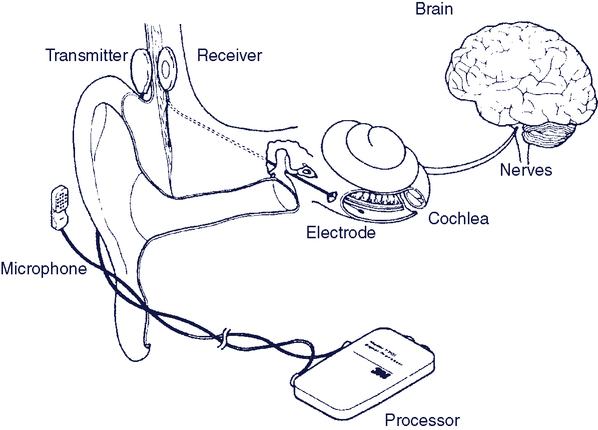

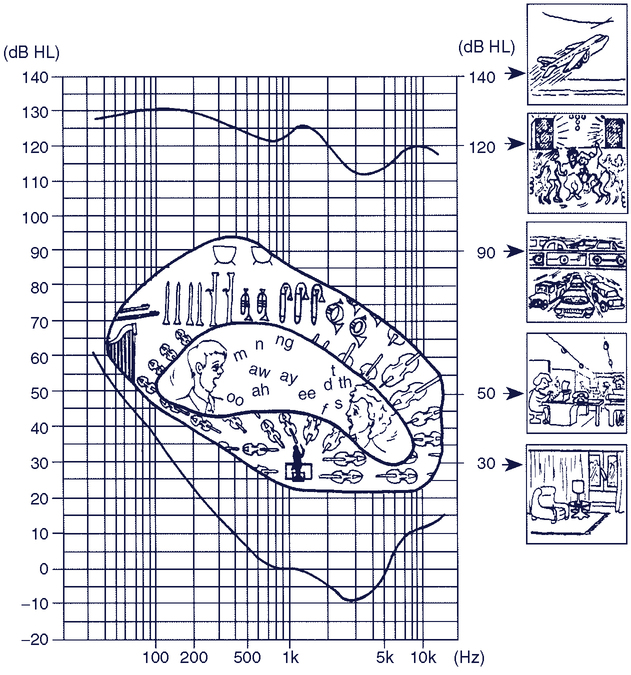

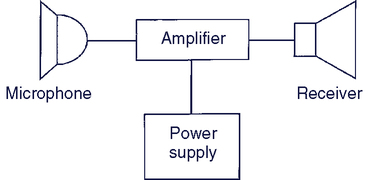

Upon completing this chapter, you will be able to do the following:

1. Describe the major types of hearing loss and their causes
2. Describe the types of hearing aids and their features
3. Describe how common devices can be adapted for use by a person who is hard of hearing or deaf
4. List ways that people who are hard of hearing or deaf can use telephones
5. Describe how computer outputs are adapted for individuals with auditory limitations
6. Discuss the major approaches used to support communication for individuals who are deaf and blind

Auditory function can be measured in several ways. Auditory thresholds include both the amplitude and frequency of audible sounds. The amplitude of sound is measured in decibels (dB). The minimal threshold for hearing is 20 dB and is equivalent to the ticking of a watch under quiet conditions at 20 feet away. Figure 9-1 shows sound pressure levels for a variety of typical sounds.1 The typical range of frequencies that can be heard by the human ear is 20 to 20,000 hertz (Hz), but the ear does not respond equally to all frequencies in this range.13

Audiologists use several types of tests to assess hearing. Pure tone audiometry presents pure (one-frequency) tones to each ear and determines the threshold of hearing for that person. The intensity of the tone is raised in 5-dB increments until it is heard; then it is lowered in 5-dB increments until it is no longer heard. The threshold is the intensity at which the person indicates that he or she hears the tone 50% of the time.

Although the frequencies presented in the pure tone test are in the range of speech (125 to 8000 Hz), this test alone does not indicate the person’s ability to understand speech. To evaluate this function, the audiologist uses a speech recognition threshold test. In this evaluation, speech is presented, either live or recorded, at varying intensity levels, and the person’s ability to understand it is determined.
The person is asked to repeat either words or sentences presented at these varying intensities. On the basis of these and other tests, the audiologist determines both the degree of hearing loss and the type of loss.

Four types of hearing loss are typically defined.13 These are (1) conductive loss associated with pathological defects of the middle ear, (2) sensorineural loss associated with defects in the cochlea or auditory nerve, (3) central loss caused by damage to the auditory cortex of the brain, and (4) functional deafness resulting from perceptual deficits rather than physiological conditions. Auditory impairment is considered slight if the loss is between 20 and 30 dB, mild if from 30 to 45 dB, moderate if from 45 to 60 dB, severe if from 60 to 75 dB, profound if from 75 to 90 dB, and extreme if from 90 to 110 dB.13 Causes of hearing loss include congenital loss, physical damage, disease, aging, and effects of medications.13 These conditions can affect the outer, middle, or inner ear.

Chapter 8 describes the fundamental approaches to sensory aids. Figure 8-1 applies to auditory as well as visual sensory aids. Augmentation of an existing pathway and use of an alternative pathway are the two basic approaches to sensory assistive technologies. When applied to the auditory system, the alternative pathways are tactile and visual. We discuss each of these approaches in this chapter.

There are two alternative sensory pathways available to someone who is deaf. The most common example is the use of manual sign language and lip reading (visual substitution for auditory).

Chapter 8 also discusses the fundamental differences among the tactile, visual, and auditory systems. The only tactile method for input of auditory information that has been successful is the Tadoma method employed by individuals who are both deaf and blind.
In this method, used by Helen Keller, the person receives information by placing his or her hands on the speaker’s face, with the thumbs on the lips, index fingers on the sides of the nose, little fingers on the throat, and other fingers on the cheeks. During speech, the fingers detect movements of the lips, nose, and cheeks and feel the vibration of the larynx in the throat. Through practice, kinesthetic input obtained from these sources is interpreted as speech patterns. One reason for the success of this method is that there is a fundamental relationship between the articulators (reflected in the movements of the lips, nose, and cheeks) and the perceived speech signal. For individuals using the Tadoma method, this relationship is at least as important as the acoustic information (pitch and loudness) in the speech signal.10

Approximately 60% of the acoustic energy of the speech signal is contained in frequencies below 500 Hz.3 However, the speech signal contains not only specific frequencies of sound, but also the organization of these sounds into meaningful units of auditory language (e.g., phonemes), and over 95% of the intelligibility of the speech signal is associated with frequencies above 500 Hz. For this reason, speech intelligibility rather than sound level is often used as the criterion for successful application of hearing aids.

Conventional hearing aids can be divided into two types: air conduction and bone conduction. All air conduction hearing aids deliver the hearing aid output into the listener’s ear canal. However, some people are unable to use air conduction hearing aids because of chronic ear infections or malformed ear canals. For these individuals, a bone conduction hearing aid is most appropriate. The most common type of bone conduction hearing aid is a BAHA (Bone Anchored Hearing Aid); see Figure 9-2. Inputs to this type of hearing aid are converted to mechanical vibrations that shake the skull and stimulate the receptors in the cochlea.
BAHAs take advantage of the fact that, at a sensory level, it does not matter whether sounds come from an air conduction hearing aid or a bone conduction hearing aid.18

Air conduction hearing aids are available in several different configurations.19 Figure 9-3 illustrates several commonly used types of aids. The major types of ear-level aids are behind-the-ear (BTE), in-the-ear (ITE), in-the-canal (ITC), and completely in-the-canal (CIC). Body-level aids are used in cases of profound hearing loss. The processor is larger to accommodate more signal processing options and greater amplification and is mounted at belt level. The body-level aid is usually used only when other types of aids cannot be used.

BTE hearing aids fit behind the ear and contain all the components shown in Figure 9-4. The amplified acoustic signal is fed into the ear canal through a small ear hook that extends over the top of the auricle and holds the hearing aid in place. A small tube directs the sound into the ear through an ear mold that serves as an acoustic coupler. This ear mold is made from an impression of the individual’s ear to ensure comfort to the user, maximize the amount of acoustical energy coupled into the ear, and prevent squealing caused by acoustic feedback. When the mold is made, a 2-ml space is included between the coupler and the eardrum. A vent hole can also be added to an ear mold. The vent can add to acoustic feedback and distortion, but it also prevents the ear from being blocked and allows sound to travel to the tympanic membrane directly. An external switch allows selection of the microphone (M), a telecoil (T) for direct telephone reception, or off (O). The MTO switch and a volume control are located on the back of the case for BTE aids.

Some types of hearing aids amplify only high frequencies because the lower frequencies are within normal hearing limits. Hearing aids used for this situation are called open fit because they do not use an ear mold.
They have a wire that runs to a speaker that fits into the ear canal but does not block out the sounds that are still heard normally. This type of aid is called a receiver in the canal (RIC). Open fit aids are often used for individuals whose hearing loss is due to aging.

The ITE aid makes use of electronic miniaturization to place the amplifier and speaker in a small casing that fits into the ear canal. The faceplate of the ITE aid is located in the opening to the ear canal, and the microphone is located in the faceplate. This provides a more “natural” location for the microphone because it receives sound that would normally be directed into the ear.19 External controls on the ITE include an MTO switch and volume control. The ITC is a smaller version of the ITE. The CIC type of hearing aid is the smallest, and it is inserted 1 to 2 mm into the canal with the speaker close to the tympanic membrane. Because this type does not protrude outside the ear canal, it is barely visible. Any controls for the ITE, ITC, and CIC types of aids are fitted onto the faceplate.

The basic components of analog and digital hearing aids are described by Cook and Polgar in Cook and Hussey’s Assistive Technologies: Principles and Practice.5

If there is damage to the cochlea of the inner ear, an auditory prosthesis can provide some sound perception. These devices, termed cochlear implants, have the components shown in Figure 9-5.6,15 As long as the eighth cranial nerve is intact, it is possible to provide stimulation via implanted electrodes. Cochlear implants have been shown to be of benefit to adults and young persons who have adventitious hearing loss (i.e., hearing loss after acquiring speech and language).19 Significant benefits have also been reported for cochlear implants in young pre-lingual children.2,20 The main distinguishing characteristics, shown in Box 9-1, are discussed in depth by Loizou (1998)11 and summarized in this section.
There are two major parts of most cochlear implants.16 External to the body are a microphone (environmental interface), electronic processing circuits that extract key parameters from the speech signal, and a transmitter that couples the information to the skull. The implanted portion consists of an electrode array (1 to 22 electrodes), a receiver that couples the external data and power to the skull, and electronic circuits that provide proper synchronization and stimulation parameters for the electrode array.

Surgical procedures consist of insertion of the electrode array into the cochlea and implantation of the internal components and linking antenna for transcranial transmission of data and power. After the implant is inserted, a period of one month or so is allowed for healing, and then a process of “switch-on and tuning” is carried out (Ramsden, 2002).16 Two thresholds are measured: the minimal perception of sound and the level at which the sound just ceases to be comfortable. Then the electrode array is tested and signal processing is applied.

Current commercial approaches to cochlear implants differ in several important respects. User evaluation research is summarized in Box 9-2.
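The two thresholds measured during “switch-on and tuning” can be thought of as the bottom and top of a usable stimulation window for each electrode. The sketch below is only an illustration of that idea, not a description of any commercial processor: it assumes a simple linear mapping from input sound level to stimulation level, and the function name, the arbitrary stimulation units, and the `floor_db` and `ceiling_db` values are all hypothetical. Actual devices typically use manufacturer-specific, nonlinear mappings.

```python
def stimulation_level(sound_db, t_level, c_level, floor_db=25.0, ceiling_db=65.0):
    """Map an input sound level into the window between two fitted thresholds.

    t_level: stimulation at which sound is just perceived (hypothetical units)
    c_level: stimulation at which sound just ceases to be comfortable
    floor_db, ceiling_db: assumed input range of the processor (illustrative only)
    """
    if c_level <= t_level:
        raise ValueError("comfort level must be above threshold level")
    if ceiling_db <= floor_db:
        raise ValueError("ceiling_db must be above floor_db")

    # Sounds outside the assumed input range are clamped to its edges,
    # so stimulation never drops below t_level or exceeds c_level.
    clamped = min(max(sound_db, floor_db), ceiling_db)

    # Linear interpolation between the two measured thresholds
    # (a simplifying assumption for illustration).
    fraction = (clamped - floor_db) / (ceiling_db - floor_db)
    return t_level + fraction * (c_level - t_level)


if __name__ == "__main__":
    # Hypothetical fitting results: electrode number -> (t_level, c_level)
    fitting = {1: (100.0, 180.0), 2: (110.0, 200.0)}
    for electrode, (t, c) in fitting.items():
        print(electrode, stimulation_level(45.0, t, c))
```

Clamping reflects the purpose of the two measurements: very soft sounds are presented at the level the person can just perceive, and very loud sounds never exceed the comfortable limit.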

Sensory Aids for Persons with Auditory Impairments

Auditory function

Hearing loss

Fundamental approaches to auditory sensory aids

Use of Alternative Sensory Pathway

Tactile Substitution

Aids for persons with auditory impairments

Hearing Aids

Types of Hearing Aids

Cochlear Implants
