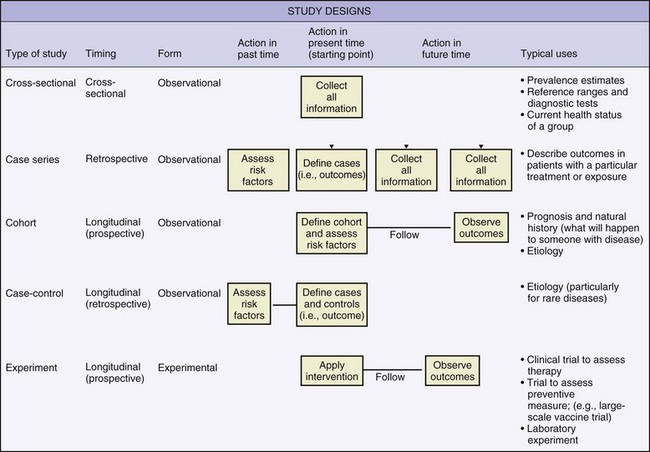

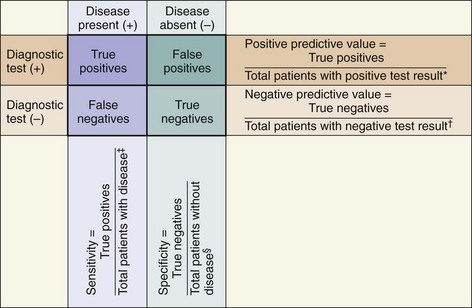

Chapter 13

SECTION 2 COMMON RESEARCH DESIGNS AND RESEARCH TERMINOLOGY: IV. Observational Research Designs; VI. Experimental Research Designs; VII. Potential Problems with Research Designs

SECTION 3 THE LEVELS OF EVIDENCE IN ORTHOPAEDIC RESEARCH

SECTION 4 CONCEPTS OF EPIDEMIOLOGY

SECTION 5 STATISTICAL METHODS FOR TESTING HYPOTHESES

SECTION 6 IMPORTANT CONCEPTS IN RESEARCH AND STATISTICS

Prospective designs

A. Designed to assess outcomes occurring forward in time

B. Exposure has occurred or a risk factor has developed; patients are monitored forward in time to determine the occurrence of an outcome of interest.

Retrospective designs

A. Designed to assess outcomes that have already occurred or data that have been collected in the past

IV. OBSERVATIONAL RESEARCH DESIGNS

These designs can be prospective, retrospective, or longitudinal (Figures 13-1 and 13-2). Common observational designs are as follows:

A. Case reports
1. Descriptions of unique injuries, disease occurrences, or outcomes in a single patient
2. No attempts at advanced data analysis are made.
3. Cause-effect relationships are not discussed, and generalizations are not made.

B. Case series
1. Outcomes are measured in patients with a particular disease or injury.
2. In orthopaedic research, these studies are typically retrospective and involve a thorough review of medical records.

C. Case-control studies
1. Outcomes measured in patients with a particular disease or injury are compared with outcomes in a control group (see subsection VII, Potential Problems with Research Designs, for more information about control groups).
2. Odds ratios (not relative risks) are appropriate measures of association from data collected in these study designs (see Section 4, Concepts of Epidemiology).

D. Cohort studies
1. Groups of patients with a similar characteristic or similar exposure or risk factors are studied forward in time (prospective) or from existing data (retrospective).
2. Cohort studies are appropriate for estimating the incidence of disease or injury and the relative risks.

E. Cross-sectional study designs

A. Validity
1. An instrument’s ability to accurately describe truth or reality
2. In a validation study, measurements recorded by a particular instrument are tested against a “gold standard” measure.

B. Reliability
1. The ability to precisely describe a characteristic with repeated measurements
2. The precision of an instrument can be tested by different examiners on the same patient (interobserver reliability) or by the same examiner at consecutive times (intraobserver reliability).
3. The intraclass correlation coefficient is a common statistical method for testing the reliability or validity of an instrument. Values range from 0 to 1.0 (1.0 represents perfect accuracy or precision).

C. Clinical studies can be designed to determine superiority of one treatment over another, whether one treatment is no worse than another (noninferiority), or whether both treatments are equally effective (equivalency).

D. Clinical research can be designed to assess outcomes data that are reported by the patient (subjective) or collected by an examiner (objective).

VI. EXPERIMENTAL RESEARCH DESIGNS

A. Clinical trials: allocate treatments and track outcomes in order to test a specific hypothesis
1. The randomized controlled trial is the “gold standard” clinical trial and provides the highest level of evidence.

B. Parallel designs: Treatments are allocated to different subjects or patients in a random or nonrandom manner.

C. Crossover designs: Each subject receives or undergoes each of the treatment conditions being studied, in series.

VII. POTENTIAL PROBLEMS WITH RESEARCH DESIGNS

A. Internal validity concerns the quality of a research design and how well the study is controlled and can be reproduced. External validity concerns the ability of the results to generalize to a whole population of interest.

B. Confounding variables are factors extraneous to a research design that potentially influence the outcome. Apparent cause-effect relationships may be explained by confounding variables rather than by the treatment or intervention being studied; confounding variables must therefore be controlled or accounted for.

C. Bias is unintentional, systematic error that threatens the internal validity of a study. Sources of bias include selection of subjects (sampling bias), loss of subjects to follow-up (nonresponder bias), observer/interviewer bias, and recall bias.

D. Protection against these threats can be achieved through randomization (i.e., random allocation of one or more treatments) to ensure that bias and confounding factors are distributed equally among the study groups. Single blinding (the examiner or the patient is unaware of the study condition to which the patient is assigned) or double blinding (both examiner and patient are unaware of the assigned study condition) is important for minimizing bias.

E. Control groups can help account for the potential placebo effect of interventions.
1. Control groups may receive an intervention that reflects the standard of care, no intervention, a placebo (i.e., inactive substance), or a sham intervention.
2. Control data may have been collected in the past (historical controls) or may occur in sequence with one or more other study interventions (crossover design).

F. Control subjects are often matched on the basis of specific characteristics (e.g., gender, age), which helps account for potential confounding variables that may influence the interpretation of research findings.

G. The strongest research design involves random allocation, blinding, and concurrent control subjects who are matched to the experimental group(s).

A. Evidence-based medicine (or evidence-based practice) aims to apply evidence from the highest quality research studies to the practice of medicine.

B. Findings from the best designed and most rigorous studies have the greatest influence on decision making.

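
The random allocation described under Potential Problems with Research Designs can be illustrated with a minimal sketch. The function name `allocate`, the arm labels, and the fixed seed are assumptions made for this illustration only; real trials use formally specified and concealed allocation procedures.

```python
import random

def allocate(subject_ids, arms=("treatment", "control"), seed=None):
    """Randomly allocate subjects to study arms in (near-)equal numbers."""
    rng = random.Random(seed)   # a seed is used here only to make the example repeatable
    shuffled = list(subject_ids)
    rng.shuffle(shuffled)       # random order, so no subject characteristic decides the arm
    # Deal the shuffled subjects out to the arms in turn.
    return {sid: arms[i % len(arms)] for i, sid in enumerate(shuffled)}

# Hypothetical study with 20 enrolled subjects numbered 1 to 20.
assignment = allocate(range(1, 21), seed=13)
arm_sizes = {arm: list(assignment.values()).count(arm) for arm in ("treatment", "control")}
print(arm_sizes)  # 10 subjects in each arm
```

Because the order is random, known and unknown confounding factors are expected to be spread evenly across the arms on average, which is the protection the text describes.
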
A. Prevalence is the proportion of existing cases of injury or disease within a particular population.

B. Incidence (absolute risk) is the proportion of new injuries or disease cases within a specified time interval (a follow-up period is required).
1. The incidence can be reported with regard to the number of exposures.
2. Example: Of 100 athletes on a sports team, 12 experience a sports-related injury during a 10-game season; the incidence rate would be reported as 12 injuries per 1000 athlete exposures (100 athletes × 10 games).

D. Relative risk (RR) is a ratio between the incidences of an outcome in two cohorts. Typically, a treated or exposed cohort (in the numerator of the ratio) is compared with an untreated or unexposed (control) group (in the denominator of the ratio). Values can range from 0 to infinity and are interpreted as follows:
1. When RR = 1.0, the incidence of an outcome is equal between groups.
2. When RR > 1.0, the incidence of an outcome is greater in the treated/exposed group (higher incidence value in the numerator).
3. When RR < 1.0, the incidence of an outcome is greater in the untreated/unexposed group (higher incidence value in the denominator).

E. Odds ratios are calculated from the probabilities of an outcome in two cohorts.
1. Odds ratios are well suited for binary data or studies in which only prevalence can be calculated.

F. Interpreting relative risk and odds ratio:
1. Odds ratio and relative risk values are interpreted similarly.
2. When outcomes of two groups are compared, a relative risk or odds ratio of 0.5 would indicate that the likelihood that the treated/exposed patients will experience a particular outcome is half that of the untreated/control group.
3. A value of 2.5 would indicate that the likelihood that the treated/exposed group will experience the outcome is 2.5 times higher than that of the untreated/control group.

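
The incidence, relative risk, and odds ratio definitions above can be checked with a short sketch. The function names and the cohort counts in the second example are hypothetical and chosen only for illustration; the first example reuses the athlete figures from the text.

```python
def incidence_per_exposures(events, exposures, per=1000):
    """Number of events per `per` exposures (for example, athlete exposures)."""
    return events * per / exposures

def relative_risk(exposed_events, exposed_total, control_events, control_total):
    """Incidence in the treated/exposed cohort divided by incidence in the control cohort."""
    return (exposed_events / exposed_total) / (control_events / control_total)

def odds_ratio(exposed_events, exposed_total, control_events, control_total):
    """Odds of the outcome in the treated/exposed group divided by odds in the control group."""
    exposed_odds = exposed_events / (exposed_total - exposed_events)
    control_odds = control_events / (control_total - control_events)
    return exposed_odds / control_odds

# Example from the text: 100 athletes x 10 games = 1000 athlete exposures, 12 injuries.
print(incidence_per_exposures(12, 100 * 10))  # 12.0 injuries per 1000 athlete exposures

# Hypothetical cohorts: 10 of 100 exposed and 20 of 100 unexposed patients have the outcome.
print(relative_risk(10, 100, 20, 100))  # 0.5
print(odds_ratio(10, 100, 20, 100))     # about 0.44
```

In this hypothetical example both measures fall below 1.0, so both point toward a lower likelihood of the outcome in the exposed group, although the two values are not identical.
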
II. CLINICAL USEFULNESS OF DIAGNOSTIC TESTS

A. A 2×2 contingency table (Figure 13-3) can be used to tabulate the occurrences of a disease or outcome of interest among patients whose diagnostic test results were positive or negative.
1. “True positives”: the number of individuals whose diagnostic test yielded positive results and who actually do have the disease or outcome of interest
2. “True negatives”: the number of individuals whose diagnostic test yielded negative results and who actually do not have the disease or outcome of interest
3. “False positives”: the number of individuals whose diagnostic test yielded positive results but who actually do not have the disease or outcome of interest
4. “False negatives”: the number of individuals whose diagnostic test yielded negative results but who actually do have the disease or outcome of interest

B. Analysis of diagnostic ability

Research Design and Biostatistics

Section 2 Common Research Designs and Research Terminology

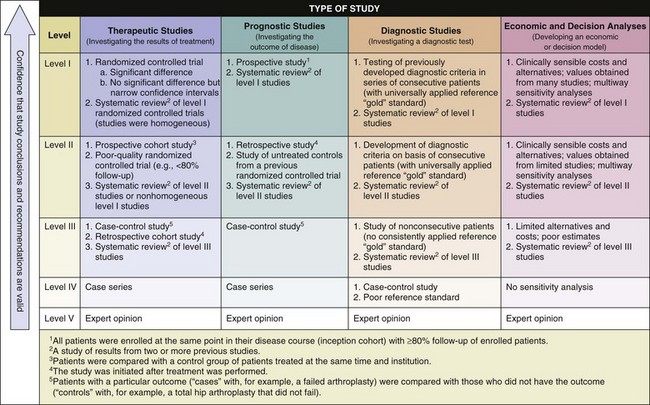

Section 3 The Levels of Evidence in Orthopaedic Research

Section 4 Concepts of Epidemiology

Sensitivity: the likelihood of a positive test result in patients who actually have the disease or condition of interest (i.e., the ability to detect “true positives” among those with a disease)

Calculated as the proportion of patients with a disease or condition of interest who have a positive diagnostic test: sensitivity = “true positives” / (“true positives” + “false negatives”)

Sensitive tests are used for screening because they yield few false-negative results. They are unlikely to miss an affected individual.

When the result of a highly sensitive (Sn) test is negative (N), the condition can be ruled “OUT” (mnemonic: “SnNOUT”).
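
The proportion described above can be written as a short calculation. This is a minimal sketch: the function name `sensitivity` and the patient counts are hypothetical, chosen only to illustrate the definition.

```python
def sensitivity(true_positives, false_negatives):
    """Proportion of patients with the disease whose test result is positive."""
    with_disease = true_positives + false_negatives  # everyone who actually has the disease
    return true_positives / with_disease

# Hypothetical counts: 100 affected patients, 95 of whom have a positive test result.
print(sensitivity(true_positives=95, false_negatives=5))  # 0.95
```

With only 5 false negatives among 100 affected patients, a negative result from this hypothetical test would rarely miss an affected individual, which is the reasoning behind “SnNOUT.”
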

Specificity: the likelihood of a negative test result in patients who actually do not have the disease or condition of interest (i.e., the ability to detect “true negatives” among those without a disease)

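Calculated as the proportion of patients without a disease or condition of interest who have a negative test result: specificity = “true negatives” / total number of patients without the disease or condition of interest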

Total number of patients without the disease or condition of interest = “true negatives” + “false positives”

Specific tests are used for confirmation because they yield few false-positive results and are therefore unlikely to result in unnecessary treatment of a healthy individual.

When the result of a highly specific (Sp) test is positive (P), the condition can be ruled “IN” (mnemonic: “SpPIN”).
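
The same kind of calculation applies to the proportion described above for patients without the disease. The function name `specificity` and the counts are hypothetical and serve only as an illustration.

```python
def specificity(true_negatives, false_positives):
    """Proportion of patients without the disease whose test result is negative."""
    without_disease = true_negatives + false_positives  # "true negatives" + "false positives"
    return true_negatives / without_disease

# Hypothetical counts: 100 unaffected patients, 90 of whom have a negative test result.
print(specificity(true_negatives=90, false_positives=10))  # 0.9
```
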

Positive predictive value: the proportion of patients with a positive test result who actually have the disease or condition of interest

Calculated as the proportion of patients with a positive test result who actually do have the disease of interest (i.e., the disease is correctly diagnosed with a positive test result): “true positives” / (“true positives” + “false positives”)
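
This proportion, commonly called the positive predictive value, differs from the earlier calculations in that its denominator is everyone with a positive test result rather than everyone with the disease. The sketch below assumes hypothetical counts and a hypothetical function name.

```python
def positive_predictive_value(true_positives, false_positives):
    """Proportion of patients with a positive test result who actually have the disease."""
    positive_results = true_positives + false_positives  # everyone who tested positive
    return true_positives / positive_results

# Hypothetical counts: 105 positive test results, 95 of them in patients with the disease.
ppv = positive_predictive_value(true_positives=95, false_positives=10)
print(round(ppv, 3))  # about 0.905
```
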
