Female participation in running is at a historical high. Special consideration should be given to this population, in whom suboptimal nutritional intake, menstrual irregularity, and bone stress injury are common. Immature athletes should garner particular attention. Advances in the understanding of the Triad and Triad-related conditions have largely informed the approach to the health of this population. Clinicians should be well versed in the identification of Triad-related risk factors. A multidisciplinary team may be necessary for the optimal treatment of at-risk runners. Nonpharmacologic strategies to increase energy availability in athletes should be used as first-line treatment.

Key points

- The adolescent years represent a vulnerable period because nutritional inadequacies and suboptimal bone accrual may have long-lasting consequences.
- Adequate intakes of calcium, vitamin D, and iron are of particular importance in female runners to optimize bone health and hematopoiesis.
- Female runners should strive to maintain an energy availability of 45 kcal/kg fat-free mass per day to avoid the downstream neurometabolic effects of menstrual dysfunction and low bone density.
- Recently released consensus guidelines and risk assessment tools may be helpful in stratifying individuals based on their risk of bone stress injury and other Triad-related sequelae.
- Nonpharmacologic therapy, such as nutritional intervention and activity modification, remains the mainstay of treatment of Triad-related conditions, with pharmacologic options reserved for severe or recalcitrant cases.

Introduction

The 1928 Summer Olympic Games marked the debut of female competition at the highest level of track and field. However, the historic occasion was marred by sensationalized accounts of female runners strewn across the finish line in prostration after completing the 800-m run. Such accounts were informed by cultural norms and pseudoscience, and the attitudes behind them severely limited women’s participation in athletics well into the second half of the twentieth century. The International Olympic Committee (IOC) would not allow women to compete at distances greater than 200 m for another 32 years. A major obstacle was overcome in 1972 with the passage of Title IX, which set a precedent for the provision of equal opportunities for women and men in sport. However, major sporting bodies like the Amateur Athletic Union and the IOC were slow to offer tangible opportunities for female sports participation, particularly in distance running. Despite the slow start, female participation in running has increased greatly over the past few decades. In 2013, more than 40% of marathon finishers in the United States were female, compared with just 10% in 1980. At the 2012 Summer Olympic Games, women comprised nearly 50% of track and field athletes and outnumbered men in the marathon by 118 to 105. Girls at the youth level constitute the fastest-growing group of participants in organized sport, increasing from 300,000 in 1972 to 3.2 million today. In terms of participation, women compare favorably with men at both the National Collegiate Athletic Association (NCAA) and high school levels (Table 1), and track and field remains the most popular sport for high school girls, with nearly 500,000 participants in 2013 to 2014. Although coeducational participation may now be the norm in many sports, the sporting worlds of women and men remain distinct. Women of all ages continue to contend with dated societal ideals of form and behavior.
At the professional level, female athletes generally garner fewer accolades and less lucrative compensation in the form of contracts, prize earnings, and endorsements than their male counterparts. These issues exist alongside a biological and hormonal milieu that varies markedly over different life stages, posing additional challenges for active women. With these considerations in mind, this article offers a perspective on health considerations in female runners, focusing on the importance of nutrition and medical concerns related to the female athlete triad (Triad).

| | Cross-country | Outdoor Track | Indoor Track |
|---|---|---|---|
| Female (HS) | 218,121 | 478,885 | 66,126 |
| Male (HS) | 252,547 | 580,321 | 73,650 |
| Female (NCAA) | 15,922 | 27,752 | 25,876 |
| Male (NCAA) | 14,218 | 27,514 | 24,785 |

Growth and development

Young female athletes face formidable challenges, including increased nutritional requirements, myths about ideal body types that may foster disordered eating (DE), and the need to reconcile advice from a variety of sources, including coaches, trainers, peers, parents, and increasingly the media. An imbalance between the demands of sport and those of normal development can be a source of stress among young athletes, and can persist into adulthood. Hence, this article initially considers the growth and maturation of active girls.

Menarche

Widely considered the central event in pubescence, menarche is heralded by the onset of pulsatile gonadotropin-releasing hormone release and its downstream mediators: luteinizing hormone (LH), follicle-stimulating hormone, and estrogen. The mean age at menarche in the United States has recently been reported at 12.3 to 12.4 years, and there is considerable agreement that this represents an earlier onset compared with previous reports. The mechanisms underlying this trend are not clear, but may be related to changes in relative weight, ethnic composition, chemical exposure, and insulin resistance over time. Although cross-sectional and retrospective studies have suggested a delayed onset of menarche in athletes, there is likely considerable bias in these observations, because late-maturing girls in sport seem to be over-represented as they pass from childhood through adolescence.

Adolescent Growth Spurt

The growth spurt in girls is an early pubertal event, occurring soon after the initiation of breast development. Peak height velocity (PHV) occurs at 10.8 to 11.3 years, or about 2 years earlier than in boys, but can vary significantly based on pubertal timing (ie, earlier, average, or later). Age at PHV is not strongly correlated with adult stature. In most cases, height velocity in girls slows dramatically by 14 or 15 years, whereas some boys grow beyond 18 years. Sport participation itself does not seem to have an appreciable effect on vertical growth in healthy children.

Changes in Body Composition

Sex differences in fat-free mass (FFM), fat mass (FM), and body fat percentage (BF%) during childhood become more clearly defined during adolescence. By the end of high school, girls have nearly twice the BF% of boys, a proportion that seems to persist even at the level of elite adult runners. Maximum increases in FFM occur at age 13 years in girls, preceding boys by about 2 years. Unlike height, body composition can be influenced by athletic training, with increases in FFM and decreases in FM seen in athletes compared with nonathletes. Weight is low for height in female distance runners (compared with nonrunners) at all ages, although it is difficult to separate effects of training from changes related to normal growth and maturation.

Skeletal Maturity

The adolescent years represent a brief but critical window of opportunity for bone accumulation. Puberty-associated increases in growth hormone and insulin-like growth factor-1 mediate this process, along with the actions of other hormones, such as dehydroepiandrosterone, estrogen, and leptin. Bone acquisition is maximal in the years surrounding PHV, or about 11 to 15 years of age, during which 33% to 46% of adult bone content is accrued. Healthy adolescents of normal weight engaging in impact activities, such as soccer, generally have higher bone mineral density (BMD) than swimmers and athletes engaging in non–weight-bearing sports. However, long-term participation in competitive endurance running may attenuate these gains in adolescent athletes, particularly at the spine. Longitudinal data show that in healthy girls bone mass accumulation declines markedly by age 16 years, highlighting early adolescence as a vulnerable period during which interruptions in normal bone accumulation may have far-reaching consequences.

Strength and Performance Measures

Before age 14 years, boys and girls differ marginally in their performance on a variety of motor tasks, including running speed. With puberty, dramatic differences in neuromuscular agility and explosiveness emerge, largely as a result of a plateau in the motor performance improvements of girls. Aerobic performance follows a similar trend: maximal oxygen uptake (V̇O₂max) increases linearly from age 7 years to PHV, then plateaus in girls, but not in boys. However, the magnitude of these differences seems to be mitigated in the endurance-trained population.

Preadolescence and Adolescence: A Vulnerable Period

The adolescent growth spurt represents a period of increased vulnerability to overuse injuries. Laboratory studies have shown that growth cartilage present during rapid phases of growth is less resistant to tensile, shear, and compressive forces than either mature bone or less mature prepubescent bone. Dissociation between bone matrix formation and bone mineralization during the growth spurt also results in diminished bone strength. Stress fractures seem to occur more frequently during the adolescent spurt as well, although prospective studies are lacking. Numerous cases of stress-related lower extremity physeal injuries involving young athletes have been reported in the literature, primarily from running-related activities. These injuries may result in leg-length discrepancy or angular malalignment of the affected leg, setting up the potential for long-term disability. The potential for catastrophic injury is highlighted by case reports of severe femoral neck stress fractures in female adolescent athletes.

Nutritional considerations

Nutrition is of paramount importance to female runners. Low energy intake (EI) can result in menstrual dysfunction, suboptimal BMD, increased risk of illness, and prolonged recovery from illness. Guidelines for macronutrient intake in adult athletes can be found in Table 2. Of special consideration, vegetarian athletes may be at risk for low intakes of energy, protein, fat, and key micronutrients, such as iron, calcium, vitamin D, riboflavin, zinc, and vitamin B12. Consultation with a sports dietitian is recommended to avoid deficiencies.

| Macronutrient | Recommended intake |
|---|---|
| Carbohydrates: general recommendations | 6–10 g/kg BW/d |
| Carbohydrates: during moderate-intensity exercise >1 h | 0.5–1.0 g/kg BW/h |
| Carbohydrates: after exercise | 1–1.5 g/kg BW within 30 min and again every 2 h for 4–6 h |
| Protein | 1.2–1.7 g/kg BW/d |
| Fat | 1–2 g/kg BW/d or 20%–35% of total EI |

Patterns of Intake in Female Runners

Across a variety of sports, female athletes reportedly consume about 30% less energy and carbohydrate (CHO) per kilogram of body weight than male athletes in the same sport. Some investigators have attributed the large discrepancies between reported EI and measured energy expenditure of female endurance athletes with stable body weights to under-reporting of EI. However, under-reporting does not account for the widely observed neurometabolic effects of chronic energy deficiency in female endurance athletes. Edwards and colleagues, in a study of female endurance runners using 7-day food diaries and doubly labeled water, documented energy deficit in 9 of 9 subjects, with a mean deficit of 32%. A large proportion of female athletes (76%) were also energy deficient in a larger heterogeneous study of endurance runners. Similar results have been found among groups of adolescent, NCAA, and elite distance runners. Deficits in protein (PRO) intake seem to be far less common.

Several investigators have related suboptimal EI in female runners to menstrual abnormalities, including luteal phase dysfunction (LPD) and amenorrhea. Amenorrheic runners have also been found to have lower resting metabolic rate (RMR) than matched, eumenorrheic counterparts, indicating an adaptive response that conserves energy. Suboptimal fat intake, although less common than CHO deficiency, has been observed in runners with suboptimal EI, menstrual dysfunction, and stress fractures. In addition, suboptimal EI may be associated with certain patterns of cognitive dietary restraint.

Defining Low Energy Availability

The determination of energy status is an imperfect science. One preferred index of energy status, energy availability (EA), is defined as EI minus exercise energy expenditure (EEE), with the difference divided by kilograms of FFM. In controlled laboratory settings, this index has been significantly associated with changes in reproductive and metabolic hormone concentrations and markers of bone formation and resorption. Table 3 outlines methods of assessing the variables used in the calculation of EA. Physically active women should strive for an EA of at least 45 kcal/kg FFM/d to ensure adequate energy for normal physiologic functions. Many controlled experiments have identified 30 kcal/kg FFM/d as a critical threshold of EA, at or below which detrimental changes in reproductive function and bone metabolism are observed.

| EI | EEE | FFM |
|---|---|---|
| 3-d food log | Metabolic equivalent of task | Dual-energy X-ray absorptiometry |
| 4-d food log | Heart rate monitoring | Air displacement plethysmography |
| 7-d food log | Accelerometry | Bioelectrical impedance |
| 24-h recall | | Skin fold caliper measurement |
| Food frequency questionnaire | | |
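The EA definition above can be sketched as a short calculation. This is a minimal illustration rather than a clinical tool: the function names, the category labels, and the example values (2000 kcal EI, 500 kcal EEE, 45 kg FFM) are hypothetical, and only the 45 and 30 kcal/kg FFM/d cut points come from the text.

```python
def energy_availability(ei_kcal: float, eee_kcal: float, ffm_kg: float) -> float:
    """Return EA in kcal/kg FFM/d, computed as (EI - EEE) / FFM."""
    if ffm_kg <= 0:
        raise ValueError("fat-free mass must be positive")
    return (ei_kcal - eee_kcal) / ffm_kg


def classify_ea(ea: float) -> str:
    """Label an EA value against the two cut points cited in the text.

    The labels themselves are illustrative assumptions, not terms
    taken from the article.
    """
    if ea >= 45:
        return "at or above target"
    if ea > 30:
        return "below target"
    return "at or below critical threshold"


# Hypothetical runner: 2000 kcal/d intake, 500 kcal/d exercise expenditure, 45 kg FFM.
ea = energy_availability(2000, 500, 45)
print(f"EA = {ea:.1f} kcal/kg FFM/d ({classify_ea(ea)})")
```

Because each input is estimated by one of the methods in Table 3, the result is only as reliable as those underlying measurements.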

Intentional Versus Unintentional Underfueling

Unintentional underfueling may occur in athletes who do not realize that they need to increase their EI to match increased EEE from training activity; this commonly occurs in high school cross-country runners. These athletes typically do not have psychological reasons that interfere with their ability to comprehend the need to increase EI. In contrast, intentional underfueling occurs when athletes restrict their EI to improve appearance, fit preconceived ideals of body image, or enhance athletic performance; this can occur in the presence or absence of DE. In a study by Manore and colleagues, 62% of female athletes endorsed a desire to lose at least 2.3 kg (5 pounds), compared with 23% of male athletes. These athletes may show behaviors such as binge eating, purging, diuretic/laxative abuse, use of diet supplements, or compulsive exercise in excess of normal training. Although athletes seldom meet the criteria for a clinical eating disorder (ED), many show signs of DE and may strive to achieve extremely low weight. In runners, motivating forces typically include the desire to achieve a body type consistent with societal pressures and/or perceived sport-specific ideals.

Performance Considerations

A preponderance of research in endurance athletes has shown the short-term performance benefits of moderate-CHO to high-CHO diets, including the ability to fend off overtraining syndrome. Regarding fueling during endurance training, the American College of Sports Medicine (ACSM) recommends ingestion of 0.7 g/kg/h of CHO during exercise in a 6% to 8% solution, particularly for events greater than 1 hour. This intake translates to about 42 g of CHO, or 620 to 1000 mL (21–34 ounces) of sports beverage, per hour in a 60-kg athlete. These recommendations are supported by 2 recent meta-analyses that confirmed the performance benefits of CHO supplementation in this range for endurance performance. In high-level runners, an individualized nutritional strategy should be developed that is designed to deliver CHO at a rate that is commensurate with exercise intensity as well as the duration of the event. After exercise, the goal is to provide adequate fluids, electrolytes, energy, and CHO to accelerate glycogen resynthesis and promote an anabolic hormone profile to hasten recovery. A CHO intake of approximately 1.0 to 1.5 g/kg body weight (BW) during the first 30 minutes, and again every 2 hours for 4 to 6 hours, seems adequate to accomplish this goal.
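As a rough illustration of the arithmetic behind these recommendations, the sketch below scales the per-kilogram CHO targets to an athlete's body weight. The function names are hypothetical; the 0.7 g/kg/h and 1.0 to 1.5 g/kg figures are taken from the paragraph above, and the 60-kg athlete mirrors the example given there.

```python
def cho_during_exercise_g_per_h(bw_kg: float, rate_g_per_kg_h: float = 0.7) -> float:
    """CHO intake during exercise (g/h) at the cited 0.7 g/kg/h rate."""
    return rate_g_per_kg_h * bw_kg


def cho_recovery_range_g(bw_kg: float) -> tuple[float, float]:
    """CHO per recovery feeding (g), using the cited 1.0-1.5 g/kg BW range.

    The text suggests one feeding within the first 30 minutes after
    exercise and another every 2 hours for 4 to 6 hours.
    """
    return (1.0 * bw_kg, 1.5 * bw_kg)


bw = 60  # kg, matching the example athlete in the text
low, high = cho_recovery_range_g(bw)
print(f"During exercise: about {cho_during_exercise_g_per_h(bw):.0f} g CHO per hour")
print(f"Each recovery feeding: {low:.0f} to {high:.0f} g CHO")
```

These are population-level starting points; as the text notes, high-level runners should have the rate individualized to exercise intensity and event duration.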

Many runners and coaches attempt to alter body composition to improve performance. Although average body composition measures of athletes in various sports are commonly reported in the literature, these values cannot be extrapolated to individuals. Specific body composition ranges may be a useful tool for clinicians monitoring high-risk athletes, but they have little relevance to performance. A multidisciplinary team, including the athlete, coach, dietitian, and physician, should work together to optimize EA, and to recognize athletes with low body mass indices (BMIs) and/or low BWs as at risk for the Triad consequences. Although low BF% is not independently associated with menstrual dysfunction, low BMD, or stress fractures, it should be considered as a consequence of inadequate EI, and/or excessive exercise, and addressed accordingly. Weight loss, if desired, should take place in the off-season or before the competitive season with the support of a sports dietitian. Findings that should raise concerns are changes in menstrual status, recurrent illness or injury, or any signs of DE.

Micronutrient Intake

Athletes who maintain a negative energy balance put themselves at risk for deficiencies in micronutrients, of which iron, calcium, and vitamin D are particularly relevant for female runners. Recommended intakes of these nutrients are summarized in Table 4.

| Age (y) | Iron (mg/d) | Calcium (mg/d) | Vitamin D (IU/d) a |
|---|---|---|---|
| 9–13 | 8 | 1300 | 600 |
| 14–18 | 15 | 1300 | 600 |
| 19–30 | 18 | 1000 | 600 |
| 31–50 | 18 | 1000 | 600 |
| 51–70 | 8 | 1200 | 600 |
| >70 | 8 | 1200 | 800 |

| Pregnant or Lactating, Age (y) | Iron (mg/d) | Calcium (mg/d) | Vitamin D (IU/d) a |
|---|---|---|---|
| 14–18 | 27 (pregnant), 10 (lactating) | 1300 | 600 |
| 19–50 | 27 (pregnant), 9 (lactating) | 1000 | 600 |

a Goals for vitamin D intake are based on dietary recommendations and are independent of the individual’s sun exposure.

Iron

Iron performs a vital role in hemoglobin synthesis and oxygen transport. Women are at greater risk of iron deficiency (ID) compared with men because of menstrual losses and decreased iron intake. In the United States, the prevalence of iron deficiency anemia (IDA) is 3% to 5% and iron deficiency without anemia (IDNA) is 16% in women of childbearing age. Athletes may be at particular risk because of increased losses caused by gastrointestinal (GI) bleeding, and reduced absorption caused by subclinical inflammation. Bioavailability of iron depends on several factors, including the individual’s iron status, the form of iron consumed (heme vs nonheme), and the presence of inhibitors, such as bran, polyphenols, and antacids. Meat consumption is a strong determinant of iron status and good sources of heme iron include lean meat, poultry, and seafood. Nonheme iron, found in white beans, lentils, spinach, and iron-enriched foods, is not as readily absorbed by the body. In a study of female runners, individuals consuming a modified vegetarian diet (<100 g red meat per week) showed 30% less iron bioavailability than those consuming a regular diet. In general, athletes with low EI (<2000 kcal/d) have been shown to be at increased risk for poor iron status. Koehler and colleagues, in a group of elite female athletes, found a mean iron intake of 13.8 ± 4.1 mg/d, with 63% below the recommended daily amount. In another study, a group of active women had poorer indices of iron status compared with sedentary controls, despite higher dietary iron intake. Female distance runners, even at the elite level, may be at particular risk for suboptimal iron status. Iron overload, typically resulting from chronic, high-dose supplementation, has been reported in runners, but is uncommon and more commonly observed in men.

Calcium

Adequate calcium status is important for optimal bone health. Disruptions in bone homeostasis lead to the gradual weakening of bone and may accelerate the onset of low bone mass or osteoporosis. Adequate calcium intake during childhood is paramount for peak bone mass, which is attained by about age 25 years. Suboptimal calcium intake is common in female athletes, especially in those for whom a drive for thinness leads to calorie restriction. Barrack and colleagues, in a study of female NCAA runners, reported intakes less than 1000 mg/d in 26% of subjects. Milk and milk products contribute substantially to calcium intake in the United States. Nieves and colleagues and Kelsey and colleagues found that, in young female runners, higher intakes of calcium, skim milk, and dairy products are associated with lower rates of stress fracture. Removing dairy products from the diet requires careful replacement with other food sources of calcium, including fortified foods. Although calcium supplements are widely available, recent evidence suggests a possible increased risk of cardiovascular events in postmenopausal women taking calcium supplements of 1 g/d. Until further studies examine this association, we recommend that most calcium intake should come from dietary sources.

Vitamin D

Adequate vitamin D status is important for health, preventing growth retardation and skeletal deformities during childhood, and decreasing the risk of osteoporosis and fracture later in life. Vitamin D deficiency causes muscle weakness. Vitamin D can be obtained from the diet, but few foods, apart from oily fish such as salmon, naturally contain vitamin D. A study of collegiate runners found that nearly 80% failed to meet recommendations for vitamin D intake, and those who did usually relied on supplements (Barrack MT, personal communication, 2015). Sunlight is a major contributor to vitamin D status, with 5 to 10 minutes of exposure to the arms and legs (depending on time of day, season, latitude, use of sunscreen, and skin pigmentation) resulting in the production of about 3000 international units (IU). Because of the angle of the sun, little or no vitamin D can be produced from November to February in areas above about 35° north latitude (eg, Los Angeles; Charlotte, NC). There is no consensus on optimal levels of vitamin D, although levels less than 20 ng/mL generally indicate deficiency. Levels between 20 and 29 ng/mL may indicate an insufficiency, and values greater than or equal to 30 ng/mL are generally considered adequate. Studies in athletes are few, but vary considerably with population, location, and the time of year. In a study of endurance runners, Willis and colleagues found 30% of subjects to be insufficient, although none were frankly deficient (<20 ng/mL). Low levels have been associated with stress fracture risk in female military recruits, although studies in athletes are lacking. More prospective studies are needed to evaluate the role of calcium and vitamin D intake in the prevention of bone stress injuries in athletes, particularly those participating in sports with greater incidences, such as distance running.
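The cut points described above can be expressed as a simple lookup. This is a sketch under stated assumptions: the function name is hypothetical, the input is assumed to be a measured vitamin D level in ng/mL, and values falling between 29 and 30 ng/mL are treated as insufficient, which the text does not specify. As noted above, there is no consensus on optimal levels, so the categories are conventions rather than firm diagnostic boundaries.

```python
def vitamin_d_status(level_ng_ml: float) -> str:
    """Classify a vitamin D level (ng/mL) using the cut points in the text.

    <20 deficient, 20 to <30 insufficient, >=30 adequate. Treating
    29-30 ng/mL as insufficient is an assumption of this sketch.
    """
    if level_ng_ml < 0:
        raise ValueError("level cannot be negative")
    if level_ng_ml < 20:
        return "deficient"
    if level_ng_ml < 30:
        return "insufficient"
    return "adequate"


# Hypothetical measurements
for level in (15, 24, 32):
    print(level, "ng/mL:", vitamin_d_status(level))
```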

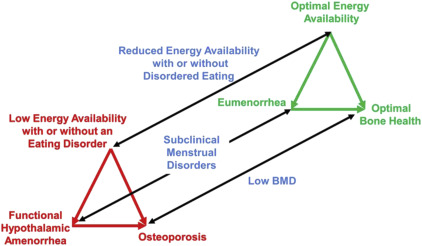

The female athlete triad

The Triad encompasses 3 components: (1) low EA with or without DE, (2) menstrual dysfunction, and (3) low BMD. Over the past few decades, increased understanding of the Triad has clarified the mechanisms correlating inadequate EI with hypoestrogenemia, decreased BMD, and subsequent increased fracture risk. Although early descriptions focused on the pathologic end points of the Triad (ED, amenorrhea, and osteoporosis), recent studies have increased recognition of subclinical disorders, such as DE, subclinical menstrual disturbances, low bone density, and bone stress injuries. Other medical complications of the Triad include endocrine, GI, renal, and neuropsychiatric manifestations.

Energy Availability

Chronic energy deficit disrupts hypothalamic neuroendocrine function in women, negatively affecting menstrual function and bone turnover. As a result, RMR is decreased, accounting for the ability of amenorrheic athletes to maintain weight stability. Muscular function may be negatively affected as well, because short-term energy deficit of just 5 days has been shown to reduce postabsorptive myofibrillar protein synthesis. Rates of bone formation are also suppressed within 5 days of decreasing EA from 45 to less than or equal to 30 kcal/kg FFM/d, and bone resorption may increase when EA is reduced enough to suppress estradiol. In a large sample of trained, exercising women, EA was also able to distinguish amenorrheic from eumenorrheic athletes. Hence, experts have recommended that exercising women should maintain an EA of at least 45 kcal/kg FFM/d to avoid the aforementioned clinical sequelae. Views regarding body image may be useful in the assessment of the runner, because a drive for thinness has been shown to be a proxy indicator of underlying energy deficiency. Monitoring athletes periodically during the year is prudent as well, because EA may change throughout the course of a season. It is worth noting that, contrary to popular belief, the stress of exercise itself, independent of EA, does not alter LH pulsatility in women.

Menstrual Status

Amenorrhea is the absence of menstrual cycles for more than 3 months and oligomenorrhea is characterized by menstrual cycles occurring at intervals longer than 35 days. Subclinical disturbances, such as LPD and anovulatory cycling, may have no perceptible symptoms. A diagnosis of functional hypothalamic amenorrhea (FHA) secondary to low EA in athletes is a diagnosis of exclusion and must follow an evaluation to rule out pregnancy and endocrinopathies. The prevalence of secondary amenorrhea, which varies widely with sport, weight, and training volume, has been reported to be as high as 65% in some studies of distance runners, compared with 2% to 5% in the general population. Amenorrhea in distance runners has been associated with increases in training mileage and decreases in BW, but not body fat per se, and seems to be less prevalent with increasing age. Subclinical menstrual disorders can be present in eumenorrheic athletes, with LPD or anovulation found in 78% of eumenorrheic recreational runners in at least 1 menstrual cycle out of 3.

Effects on Bone

Low BMD can be diagnosed via dual-energy X-ray absorptiometry (DXA) based on guidelines from the International Society of Clinical Densitometry and the ACSM. Exercise has beneficial effects on bone mineral accumulation during childhood and early adolescence, with 10% to 40% higher bone mass gains observed in physically active adolescents compared with sedentary individuals. However, the hypoestrogenic state that underlies menstrual dysfunction has adverse effects on bone, with lower BMD values seen in amenorrheic versus eumenorrheic athletes. DE is also associated with low BMD independently of menstrual irregularity in runners, and dietary restraint may be the DE behavior most associated with negative bone health effects in young runners. One systematic review of female athletes found the prevalence of osteopenia and osteoporosis in excess of what would be expected in a normal population distribution. In a study by Barrack and colleagues, nearly 40% of a sample of female adolescent runners had a Z-score less than −1 on DXA, which was approximately twice the proportion reported in a previous study of athletes representing multiple sports. Female adolescent runners, in particular, seem to show a suppressed bone mineral accrual pattern that may put them at risk for suboptimal peak bone mass. Tenforde and colleagues, in their cross-sectional study of adolescent runners, found that female runners with a BMI less than or equal to 17.5 kg/m², or both menstrual irregularity and a history of fracture, were significantly more likely to have low bone mass. For adolescent boys, those with a BMI less than or equal to 17.5 kg/m² and the belief that thinness improves performance were significantly more likely to have low bone mass. In young runners who display low BMD at baseline, catch-up accrual may be difficult, highlighting the importance of adequate EA and neuroendocrine function during the adolescent years.

Relationship to Stress Fractures

Bone stress injury (BSI) results from chronic repetitive mechanical stress and exists on a spectrum from mild stress reaction to cortical fracture. BSIs represent a significant burden for female runners, with a reported incidence of up to 21% in competitive track athletes, and increased risk compared with male counterparts. Numerous studies have documented the relationship between amenorrhea and low bone mass and the risk for stress fractures. Bennell and colleagues, in a study of track athletes, found late age at menarche to be one of the strongest predictors of stress fracture risk. Recent findings by Barrack and colleagues, showing the cumulative risk conferred by multiple Triad-related risk factors on bone health and susceptibility to fracture, highlight the important role that risk stratification may have in decreasing risk for injury. In this study, the highest risk was seen with a combination of greater than 12 h/wk of exercise, participating in a leanness sport/activity, and evidence of dietary restraint, which conferred a 46% risk of incurring a BSI (odds ratio, 8.7; 95% confidence interval, 2.7–28.3). Tenforde and colleagues also reported that the combination of late age at menarche, prior fracture, participation in dance/gymnastics, and BMI less than 19 kg/m² conferred the greatest risk of BSI in a study of adolescent runners. Female athletes diagnosed with BSI who are found to have menstrual irregularity should also be screened for DE and low BMD. A detailed discussion of BSI is beyond the scope of this review.

Screening

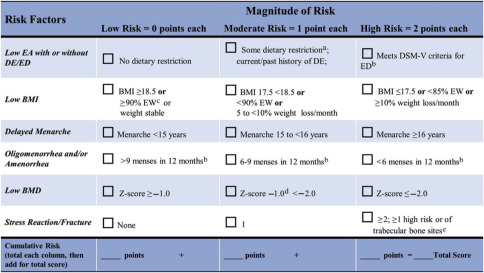

Screening for the Triad requires an understanding of the relationships among its components, the spectrum within each component, and rates of movement along each spectrum (Fig. 1). The preparticipation physical and annual health examinations provide optimal screening opportunities, but other occasions may present when athletes are evaluated for issues such as menstrual dysfunction, BSI, or recurrent injury or illness. Athletes who present with one component of the Triad should be assessed for the others. A recent panel recommended the use of a risk-stratification tool that incorporates evidence-based risk factors for the Triad, assigning a point value in each Triad category based on risk magnitude (Fig. 2).

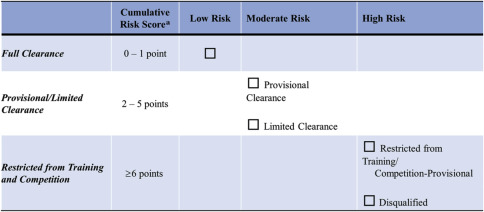

This protocol is then translated into clearance and return-to-play guidelines based on the athlete’s total score (Fig. 3). Of note, a prior history of certain risk factors (eg, ED, oligomenorrhea/amenorrhea, or BSI) still confers risk points, even if the condition is not currently present. In addition to risk stratification, the team physician should use clinical judgment and take into account the athlete’s unique situation in making a decision for clearance and/or return to play. Table 5 shows the assessment of risk using this tool in 2 sample runners.

| Risk Factor | Athlete A: Comment | Athlete A: Score | Athlete B: Comment | Athlete B: Score |
|---|---|---|---|---|
| Low EA with or without ED/DE | No dietary restriction | 0 | Mild restriction (vegetarian) | 1 |
| Low BMI | BMI 21 | 0 | BMI 18.1 | 1 |
| Delayed menarche | Menarche age 15 y | 1 | Menarche age 15 y | 1 |
| Oligomenorrhea/amenorrhea | At present eumenorrheic; no past history of menstrual dysfunction | 0 | 6 menses in the past 12 mo | 1 |
| Low BMD | No prior DXA | 0 | No prior DXA | 0 |
| BSI | History of second metatarsal shaft BSI | 1 | History of bilateral tibial BSI in high school | 2 |
| Total score | Moderate risk | 2 | High risk | 6 |
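The cumulative scoring in Table 5 can be reproduced with a short sketch. The per-category points are copied from the table; the dictionary keys and function names are hypothetical, and the category cut points (0 to 1 low, 2 to 5 moderate, 6 or more high) are an assumption that is consistent with the two totals in Table 5 but is not spelled out in the text, because the scoring figures are not reproduced here.

```python
# Per-category points for the two sample runners, taken from Table 5.
ATHLETE_A = {
    "low_ea": 0, "low_bmi": 0, "delayed_menarche": 1,
    "oligo_amenorrhea": 0, "low_bmd": 0, "bsi": 1,
}
ATHLETE_B = {
    "low_ea": 1, "low_bmi": 1, "delayed_menarche": 1,
    "oligo_amenorrhea": 1, "low_bmd": 0, "bsi": 2,
}


def total_score(points: dict[str, int]) -> int:
    """Sum the points assigned across all Triad risk categories."""
    return sum(points.values())


def risk_category(score: int) -> str:
    """Map a cumulative score to a risk level.

    Cut points are assumed (0-1 low, 2-5 moderate, >=6 high); they
    agree with Table 5 but should be checked against the source tool.
    """
    if score <= 1:
        return "low risk"
    if score <= 5:
        return "moderate risk"
    return "high risk"


for name, points in (("Athlete A", ATHLETE_A), ("Athlete B", ATHLETE_B)):
    score = total_score(points)
    print(f"{name}: total {score}, {risk_category(score)}")
```

As the text emphasizes, a score of this kind supports but does not replace the team physician's clinical judgment about clearance and return to play.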
