Fig. 21.1

Chronological representation of image guidance displays. Transparent templates of stereotactic grid coordinates were used in the pre-computer age. In the 1970s, early monochrome computer displays were used to present the stereotactic grid locations of a planned insertion trajectory [2]. Today, complex 3D representations of data are presented on high-definition monitors, and in the future, augmented reality will allow data to be displayed directly in the view of the patient without the need for a secondary display [3].

The displayed intraoperative view typically contains an augmented virtual representation of the surgical scene, compiled from image data, 3D models of the underlying anatomy of interest, instrument locations, surgical plan data and measurement data such as alignment angles and positions, distances, volumes or functional information.

Whilst offering vast improvements over earlier display techniques and technologies, modern-day computer monitors, like the earliest displays, continue to display data away from the situs. Separating the data from the patient forces the surgeon to divert sight and attention away from the patient. This reduces safety and introduces errors, both from the mental alignment of the two scenes and from the hand-eye coordination needed to execute surgical tasks while following the presented data. Augmented reality (AR), defined as the augmentation or supplementation of a real-world view with real-time computer-generated information that is registered to the real-world scene, instead allows feedback information to be displayed directly in the view of the patient.

Within this chapter, the principles and history of augmented reality displays are described. More specifically, a novel augmented reality approach employing overlay projection is presented. The design of the 3D overlay system and reports of its preliminary use in surgical applications are provided. Finally, the challenges of realizing 3D projection solutions are presented, along with a discussion of current research topics and a vision of the role that 3D projection, and augmented reality in general, is likely to play in orthopaedic surgery in the future.

History of Augmented Reality in Orthopedic Surgery

In the early 2000s, AR technologies for the display of image guidance data were first developed by a number of research groups. Most notably, the systems developed by DiGioia [4], Blackwell [5], Masamune [6], Stetten [7] and later Fichtinger [8] utilised semi-transparent monitors combined with half-silvered mirrors to display 3D data directly in the view of the patient (Fig. 21.2). Masamune et al. and Stetten et al. presented overlay systems capable of displaying medical images above the patient, thus avoiding the sight diversion traditionally required to consult the medical images. Similarly, Blackwell et al. [5], Nikou et al. [9] and DiGioia et al. [10] described the benefits of applying semi-transparent AR displays to a range of navigated orthopaedic procedures, including arthroscopy, pelvic screw fixation and the positioning of the acetabular and femoral prosthetic components in total hip replacement surgery. These systems allowed surgeons, for the first time, to view the patient together with computer-generated anatomical models and guidance data in a single view, giving the illusion of 3D models floating immediately above the patient. The approach was initially promising, but the obtrusive equipment required around the surgical scene, the resulting limited workspace, long set-up times and complex calibrations prevented its widespread use in navigated orthopaedic surgery or in any other form of surgery.

Additionally, the significant distance between the display and the patient made the technologies highly susceptible to parallax error. Head-tracking glasses were proposed to correct the perspective of the 3D data for the viewing angle; however, the position of the user's eye, which was computed from the location of the tracked glasses, continued to be reported as a primary source of error.

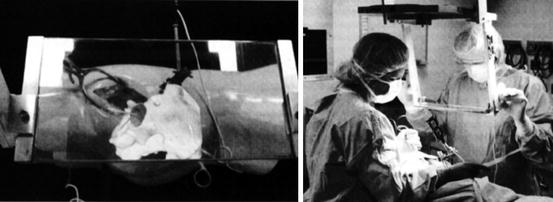

Fig. 21.2

The semi-transparent augmented reality display described by Nikou et al., employed in image-guided orthopedic surgery

More recently, 3D overlay projection techniques have been investigated as a means of achieving AR visualization in surgical applications. Projecting light directly onto the patient provides an immersive fused scene whilst overcoming the limited workspace, obtrusive equipment, elaborate set-up times and reduced surgical vision of previous systems.

The use of standard data projectors for visualising image guidance data was first explored by Sugimoto et al. in 2010, who displayed underlying organs and planned port locations for laparoscopic visceral surgery [11]. The projector was positioned statically above the patient, and projected images of volume-rendered patient anatomy were coarsely registered to the patient by manually aligning the projected navel with the patient's navel. Despite deficiencies in accuracy, integration and set-up complexity, Sugimoto et al. highlighted the potential of 3D projection technology, concluding that the image overlay aided the three-dimensional understanding of anatomical structures and led to improved surgical outcomes through reductions in operation time, intra-operative injuries and bleeding [11]. Augmented reality was found to aid in the determination of correct dissection planes and the localisation of tumours, adjacent organs and blood vessels. It has been predicted that such technology could be used to avoid injury to invisible structures and to minimise the dissection and resection of neighbouring tissues [12].

Projection Based Navigation

Building on the preliminary work of Sugimoto et al., a handheld, portable projection device that can display registered, continuously updated image guidance data, including the real-time locations of tracked instruments, was developed in 2011 at the University of Bern, Switzerland [13]. The device, designed specifically for the augmented reality display of surgical image guidance data, took advantage of the recent miniaturisation of projection technologies and the introduction of commercially available laser projection devices. It connects to a surgical image guidance system in the same way as a standard monitor (VGA/DVI) and is depicted in Fig. 21.3.

Fig. 21.3

Design of a portable 3D projection device for use with surgical image guidance systems

Miniaturisation of the projection technology has made this device portable and handheld. By attaching a reference marker that can be tracked by the surgical navigation system's tracking sensor, the projection content can be updated in real time for the current projection volume, allowing the device to be moved freely within the tracking system's working volume.

In contrast to conventional projectors, the device has no optical projection lenses, and the laser spot size grows at the same rate as the image, so the projected image is always in focus. The use of laser projection technology therefore allows the handheld device to be used at any distance from a projection surface (provided that sufficient image size and intensity can be maintained) without the need to focus the image manually or automatically. The incorporated RGB laser projector contains a video processor and a micro-electro-mechanical system (MEMS) actuated mirror that reflects the combined RGB laser output, producing an active scan cone of 43.7° × 24.6°. The projected images have a resolution of 848 × 480 pixels, a frame rate of 60 Hz and a luminous flux of 10 lm, bright enough to be visible in ambient light.
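The caveat about "sufficient image size and intensity" can be made concrete with a little geometry. The sketch below uses only the scan cone, resolution and 10 lm figure quoted above, and assumes a flat surface perpendicular to the projection axis, a uniform spread of light and no losses; the function name `footprint` is purely illustrative.

```python
import math

# Values quoted in the text for the laser projection device.
SCAN_CONE_DEG = (43.7, 24.6)   # full horizontal and vertical scan angles
RESOLUTION_PX = (848, 480)     # horizontal and vertical pixels
FLUX_LM = 10.0                 # luminous flux in lumens

def footprint(distance_mm):
    """Approximate image size, horizontal pixel pitch and mean illuminance at a
    throw distance, assuming a flat surface perpendicular to the projection axis."""
    width_mm = 2.0 * distance_mm * math.tan(math.radians(SCAN_CONE_DEG[0]) / 2.0)
    height_mm = 2.0 * distance_mm * math.tan(math.radians(SCAN_CONE_DEG[1]) / 2.0)
    area_m2 = (width_mm / 1000.0) * (height_mm / 1000.0)
    return {
        "width_mm": width_mm,
        "height_mm": height_mm,
        "pixel_pitch_h_mm": width_mm / RESOLUTION_PX[0],
        # Assumes the flux is spread uniformly over the image and ignores losses.
        "illuminance_lx": FLUX_LM / area_m2,
    }

for d in (200.0, 300.0, 500.0):
    f = footprint(d)
    print(f"{d:.0f} mm: {f['width_mm']:.0f} x {f['height_mm']:.0f} mm, "
          f"{f['pixel_pitch_h_mm']:.2f} mm/px, {f['illuminance_lx']:.0f} lx")
```

At 300 mm, for example, this gives an image of roughly 241 × 131 mm, a horizontal pixel pitch of about 0.28 mm and a mean illuminance of about 320 lx. Doubling the distance doubles the image size and pixel pitch but quarters the illuminance, which is the trade-off behind working-distance limits for a fixed 10 lm source.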

Accurate alignment of the virtual scene with the patient is the primary challenge in creating a projected augmented reality view during surgery. As the real view changes, or as objects of interest within the real scene move, the virtual scene must be updated, realigned and displayed faster than the eye can detect. To align the projected data geometrically with the patient, augmentation data such as virtual models of real anatomy, labels or interactive measurements created from medical imaging data must be rendered in a virtual scene from the same viewpoint as the projector.
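Rendering the virtual scene at the projector's view amounts to giving the virtual camera the projector's scan cone and pixel grid. The following is a minimal sketch using an ideal pinhole model, assuming no distortion, a principal point at the image centre and a projector frame with x to the right, y down and z along the projection axis; these conventions and the function names are illustrative assumptions, not the device's actual rendering pipeline.

```python
import math

def projector_intrinsics(h_fov_deg, v_fov_deg, width_px, height_px):
    """Focal lengths (in pixels) and principal point of an ideal pinhole model
    whose frustum matches the projector's scan cone and pixel grid."""
    fx = (width_px / 2.0) / math.tan(math.radians(h_fov_deg) / 2.0)
    fy = (height_px / 2.0) / math.tan(math.radians(v_fov_deg) / 2.0)
    return fx, fy, width_px / 2.0, height_px / 2.0

def project(point_o, intrinsics):
    """Map a 3D point in the projector frame (x right, y down, z along the
    projection axis, mm) to pixel coordinates; None if behind the projector."""
    x, y, z = point_o
    if z <= 0.0:
        return None
    fx, fy, cx, cy = intrinsics
    return fx * x / z + cx, fy * y / z + cy

K = projector_intrinsics(43.7, 24.6, 848, 480)
print(project((0.0, 0.0, 300.0), K))    # on the projection axis -> (424.0, 240.0)
print(project((50.0, -20.0, 300.0), K)) # a point right of and above the axis
```

Because the quoted scan cone (43.7° × 24.6°) and pixel grid (848 × 480) do not have exactly the same aspect ratio, the sketch computes the horizontal and vertical focal lengths separately rather than assuming square pixels.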

This alignment requires, first, registration of the images to the patient, as performed in standard image-guided procedures, and second, calibration of the projection device to determine its view relative to its tracked position. A model of the transformations required for the projection device's functionality is shown in Fig. 21.4.

Fig. 21.4

Projection system functional model

Images for projection are rendered in a virtual image guidance scene from the viewpoint of the projector, using a virtual camera model whose pose in the 3D virtual scene is defined by the relative pose of the projector and the patient. The patient-to-image registration process yields the transformation relating the patient to the position sensor, ${}^{S}T_{P}$, and the 3D pose of the projection device reference marker within the surgical scene is tracked by the navigation system in the coordinate system of the position sensor, ${}^{S}T_{M}$. The transformation defining the relationship between the origin of projection and the tracked reference marker, ${}^{M}T_{O}$, is determined during a calibration process. Here ${}^{A}T_{B}$ denotes the 4 × 4 homogeneous transformation that maps coordinates expressed in frame B into frame A. The view of the virtual camera relative to the image data is defined by the projector's field of view and image aspect ratio, and by its pose ${}^{P}T_{O}$, given by Eq. (21.1).

$$ {}^{P}T_{O} = \left({}^{S}T_{P}\right)^{-1}\,{}^{S}T_{M}\,{}^{M}T_{O} \qquad (21.1) $$
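The transformation chain of Eq. (21.1) can be sketched in a few lines. This assumes the convention that ${}^{A}T_{B}$ (written `A_T_B` in code) maps coordinates from frame B into frame A, with S the position sensor, P the registered patient/image data, M the projector reference marker and O the origin of projection, so that ${}^{P}T_{O} = ({}^{S}T_{P})^{-1}\,{}^{S}T_{M}\,{}^{M}T_{O}$. The helper `pose` and all numeric poses are hypothetical placeholders for the registration, tracking and calibration results.

```python
import math

# A rigid transform is a 4x4 homogeneous matrix stored as a list of rows.
# Convention (assumed): A_T_B maps coordinates expressed in frame B into frame A.

def matmul(a, b):
    """Product of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def rigid_inverse(t):
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    q = [-sum(r_t[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [r_t[i] + [q[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def pose(rot_z_deg, translation):
    """Hypothetical helper: rotation about z combined with a translation (mm)."""
    c = math.cos(math.radians(rot_z_deg))
    s = math.sin(math.radians(rot_z_deg))
    tx, ty, tz = translation
    return [[c, -s, 0.0, tx], [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz], [0.0, 0.0, 0.0, 1.0]]

def transform_point(t, p):
    """Apply a homogeneous transform to a 3D point."""
    return tuple(t[i][0] * p[0] + t[i][1] * p[1] + t[i][2] * p[2] + t[i][3]
                 for i in range(3))

def projector_pose_in_image(S_T_P, S_T_M, M_T_O):
    """P_T_O = inverse(S_T_P) * S_T_M * M_T_O, cf. Eq. (21.1)."""
    return matmul(matmul(rigid_inverse(S_T_P), S_T_M), M_T_O)

# Made-up example poses; in practice these come from registration,
# tracking (updated every frame) and a one-off calibration.
S_T_P = pose(90.0, (100.0, 0.0, 0.0))    # patient-to-image registration
S_T_M = pose(30.0, (0.0, 50.0, 500.0))   # tracked projector reference marker
M_T_O = pose(0.0, (10.0, 0.0, -20.0))    # projector calibration

P_T_O = projector_pose_in_image(S_T_P, S_T_M, M_T_O)
O_T_P = rigid_inverse(P_T_O)   # view transform of the virtual camera

landmark_P = (10.0, 20.0, 30.0)             # a point in image coordinates
print(transform_point(O_T_P, landmark_P))   # the same point in projector coordinates
```

A useful sanity check is that a landmark mapped into projector coordinates and then back through ${}^{S}T_{M}\,{}^{M}T_{O}$ lands at the same sensor-frame position as mapping it directly through ${}^{S}T_{P}$; because ${}^{S}T_{M}$ changes every frame, only this composition (not the calibration) needs to be recomputed as the device moves.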