Abstract

Impairment of an individual’s ability to communicate is a major hurdle to active participation in education and social life. Many individuals with cerebral palsy (CP) have normal intelligence; however, because they are unable to communicate, they fall behind. Non-invasive electroencephalogram (EEG)-based brain-computer interfaces (BCIs) have been proposed as potential assistive devices for individuals with CP. BCIs translate brain signals directly into action, so motor activity is no longer required. However, the translation of EEG signals may be unreliable and can require months of training. Moreover, individuals with CP may exhibit high levels of spontaneous and uncontrolled movement, which strongly degrades EEG signal quality and results in incorrect translations. We introduce a novel thought-based row-column scanning communication board that was developed following user-centered design principles. Key features include an automatic online artifact reduction method and an evidence accumulation procedure for decision making; the latter allows robust decisions to be made from unreliable BCI input. Fourteen users with CP participated in a supporting online study and helped to evaluate the performance of the developed system. Users were asked to select target items with the row-column scanning communication board. Three users were excluded because of insufficient EEG signal quality. The results suggest that seven of the eleven remaining users performed better than chance and were consequently able to communicate using the developed system. These results are very encouraging and represent a good foundation for the development of real-world BCI-based communication devices for users with CP.

1

Introduction

Cerebral palsy (CP) is a non-progressive condition caused by damage to the brain during early developmental stages. Individuals with CP may have a range of problems related to motor control, speech, comprehension or other cognitive functions. Around one quarter of individuals with CP have normal intelligence, but are nevertheless often classified as cognitively challenged because they are unable to communicate. Communication solutions are available; however, they strongly depend on motor activity and on the assistance of others. Brain-Computer Interfaces (BCIs) represent a possible alternative communication channel.

BCIs translate brain activity directly into action. Non-invasive BCIs are typically based on electroencephalographic (EEG) signals. To send messages, BCI users either focus on sensory stimuli (visual, auditory or somatosensory) and thereby generate evoked potentials (EPs), or, independently of any stimulus, perform specific mental imagery that induces transient changes in spontaneous EEG rhythms (Event-Related Desynchronization, ERD). Such mental imagery includes, among others, motor imagery (the kinesthetic imagination of movement), mental arithmetic and the mental generation of words.
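ERD is conventionally quantified as the relative band-power change between an activity period and a reference period, ERD% = (A − R) / R × 100, where negative values indicate a power decrease. The sketch below is illustrative only; it assumes both inputs have already been band-pass filtered to the rhythm of interest and estimates band power as the mean squared amplitude. The function name `erd_percent` is hypothetical.

```python
import numpy as np


def erd_percent(activity, reference):
    """Relative band-power change ERD% = (A - R) / R * 100.

    Hypothetical helper: assumes `activity` and `reference` are already
    band-pass filtered, and uses the mean squared amplitude as band power.
    Negative values indicate desynchronization (a power decrease).
    """
    a = np.mean(np.square(activity))   # band power during imagery
    r = np.mean(np.square(reference))  # band power during the reference period
    return (a - r) / r * 100.0


# Example: a 10 Hz rhythm whose amplitude halves during imagery -> power drops to 25 %.
t = np.arange(512) / 512.0
ref = 2.0 * np.sin(2 * np.pi * 10 * t)
act = 1.0 * np.sin(2 * np.pi * 10 * t)
print(round(erd_percent(act, ref), 6))
```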

Within the framework of the EU FP7 research project ABC, we recently started developing BCI technology for individuals with CP. The aim of the project is to develop assistive technology that improves independent interaction, enhances non-verbal communication and allows the expression and management of emotions for users with CP. Several challenges have to be faced. Firstly, EEG sensor placement can be difficult because of body posture or head and neck support systems, and will benefit from novel, more user-friendly materials and sensor processes. Secondly, involuntary movements and spasms generate bioelectrical activity that leads to artifacts, which can produce misleading EEG signals or destroy them altogether. Ensuring high signal quality is therefore essential. Thirdly, time-consuming BCI calibration processes need to be optimized. While EP-based BCIs typically achieve higher detection rates and require substantially less training time than imagery-based BCIs, which type will be most useful depends on the residual motor and cognitive capabilities of the user. Major issues affecting detection performance are the non-stationarity and inherent variability of EEG signals. Novel methods and protocols tailored to the user group have to be developed that allow robust control signals to be derived from EEG data. Fourthly, BCI training paradigms and instructions have to be adapted to each CP user’s individual capabilities and skills. Depending on their situation and the availability of services, individuals with CP may or may not attend school or special training programs. Each user is therefore different, and information must be presented in a user-specific manner.

To ensure the usability and functionality of our developments, we follow user-centered design principles. In this paper, we present our first prototype BCI and a corresponding communication application, and report results of a supporting online study in 14 end-users with CP.

2

Methods

2.1

User-centered system design adaptations

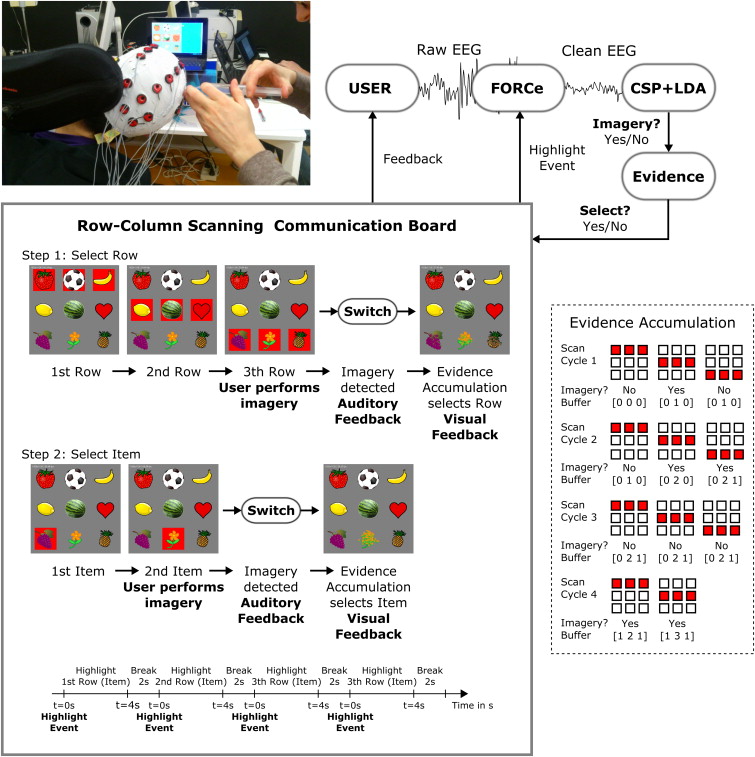

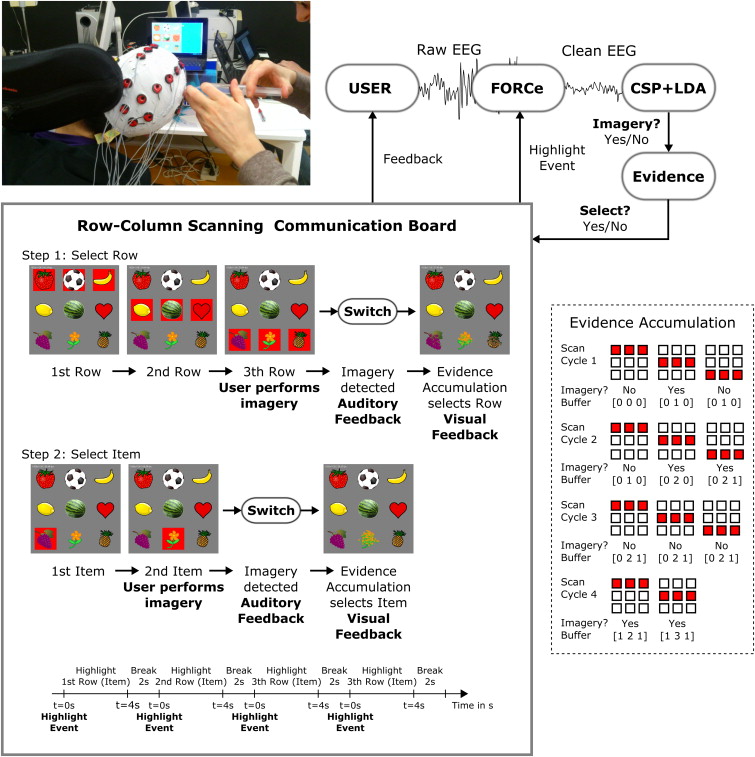

The BCI was designed and remodeled in several iterations according to the feedback received from adult CP users, relatives, caregivers and healthy test users. Firstly, we tested EP-based and imagery-based BCIs in CP users. We found that CP users could not use EP-based methods for a number of reasons, whereas imagery-based methods were viable. Secondly, we aimed at developing a communication application. Some individuals had previous experience operating row-column scanning communication boards such as The Grid Augmentative and Alternative Communication (AAC) software (Sensory Software International, Malvern, UK). The Grid uses one-switch row-column scanning to select items arranged in a grid. In row-column scanning, each row within the grid is highlighted in turn until the user selects the row containing the desired item (for example, letters or icons, Fig. 1) by activating the switch. The columns within the selected row are then scanned until the target item is highlighted and can be selected by activating the switch a second time. Consequently, we aimed at developing a BCI that robustly generates a binary control signal to replace the switch. To optimize communication speed, we first implemented maximum-likelihood selection, i.e., the letters most likely to be selected appeared as the next available items. This dynamically adapted scanning protocol, however, was confusing for most users, so we switched back to the slower but familiar row-column scanning mode. Thirdly, concentrating on continuous feedback, which is typically used in BCIs, was very demanding for users. Therefore, we changed to discrete feedback (Fig. 1): users were only notified whether imagery (equivalent to a switch activation) was detected while the current row (item) was highlighted. Fourthly, spelling words by selecting individual letters was very difficult for CP users.
To facilitate selection, we therefore used symbols and images representing the action to be performed. Fifthly, BCI training paradigms and BCI-based control usually differ in that training does not provide feedback on detected imagery. This distinction was not transparent to users, and conventional training paradigms were moreover too abstract and boring for them. Consequently, training and control paradigms were combined. Reducing graphical user interface (GUI) complexity in this way makes instructions simpler and less ambiguous for the user.
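The one-switch row-column scanning logic described above can be sketched as follows. This is a minimal simulation, not The Grid or the ABC application: the `switch` callback (hypothetical) receives the currently highlighted items and returns `True` when the switch is activated, and the grid layout is an assumed row-major arrangement of the nine items used in this study. Returning `None` when no item is chosen within `max_cycles` column cycles is a simplification.

```python
def row_column_scan(grid, switch, max_cycles=10):
    """Simulate one-switch row-column scanning.

    Returns (selected_item, n_scan_steps), or (None, n_scan_steps) if nothing
    is selected within `max_cycles` passes. `switch(highlighted)` is a
    hypothetical callback that returns True when the switch is activated.
    """
    steps = 0
    for _ in range(max_cycles):
        for row in grid:
            steps += 1                  # one row highlighted per scan step
            if switch(row):             # switch activated while this row is highlighted
                for _ in range(max_cycles):
                    for item in row:
                        steps += 1      # one item highlighted per scan step
                        if switch([item]):
                            return item, steps
                return None, steps      # row chosen, but no item within max_cycles
    return None, steps


# Assumed row-major layout of the nine items (the actual layout is shown in Fig. 1).
EXAMPLE_GRID = [
    ["strawberry", "soccer ball", "banana"],
    ["lemon", "watermelon", "heart"],
    ["grapes", "flower", "pineapple"],
]

# An ideal user who activates the switch whenever the target is highlighted.
print(row_column_scan(EXAMPLE_GRID, lambda highlighted: "lemon" in highlighted))
```

With an ideal switch, selecting the item in row r and column c takes r + c scan steps; the BCI replaces this ideal switch with an unreliable imagery detector.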

Based on these specifications, and with the aim of overcoming some of the challenges outlined in the Introduction, we developed the current BCI system (Fig. 1).

2.2

System architecture

The BCI has a distributed, modular architecture and was implemented using open standards wherever possible. In the current implementation, a laptop computer running the Windows operating system (OS) was used for EEG acquisition and signal processing. Feedback and the application were presented on an Android OS tablet computer (Fig. 1). BCI modules were implemented following the TOBI interface specifications. Communication between the BCI and the Android application was based on a specially developed ABC protocol. This allows users to interact with the application through other input signals and modalities developed within the ABC project (for example, standard human-computer interaction or inertial measurement devices). Operator computers can be used to monitor and control experimental procedures.

2.3

The row-column scanning communication board

Fig. 1 shows a picture of the GUI. The screen is split into two parts: the left side contains the grid, and feedback on the selected item is presented on the right side. Each row (item) is highlighted with a red box for a predefined time. The marker then disappears and, after a short break, the next row (item) is highlighted. When the last row (item) is reached, the marker jumps back to the first element and the sequence starts again. The selection of a row (item) is reported back to the user visually by an animation of the row (item) dissolving, accompanied by an auditory beep. When an item is selected, the scan cycle restarts from the first row. In this study, a grid with three rows and three columns was used. Rows (items) were highlighted for 4 s with a 2-s break between markers. Timing can be adapted to the user’s needs when required. Items included a strawberry, soccer ball, banana, lemon, watermelon, heart, grapes, flower and pineapple (Fig. 1).
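With 4-s highlights and 2-s breaks, each scan step occupies 6 s, so the time needed to reach an item follows directly from its position. The helper below is a back-of-the-envelope sketch under stated assumptions: every step is counted as a full highlight-plus-break slot, the selection animation is ignored, and the hypothetical `visits` parameter models a detector that must confirm the target on that many consecutive scan cycles (`visits=1` is ideal one-switch scanning).

```python
def selection_time_s(row, col, visits=1, n_rows=3, n_cols=3,
                     highlight_s=4.0, pause_s=2.0):
    """Rough time (s) to select the item at 1-based (row, col) with a perfect detector.

    Assumptions: each scan step lasts highlight_s + pause_s, the animation is
    ignored, and the target must be detected on `visits` consecutive cycles.
    """
    row_steps = (visits - 1) * n_rows + row  # the final confirmation falls on the target row
    col_steps = (visits - 1) * n_cols + col
    return (row_steps + col_steps) * (highlight_s + pause_s)


print(selection_time_s(1, 1))            # fastest item, single detection
print(selection_time_s(3, 3))            # slowest item, single detection
print(selection_time_s(3, 3, visits=3))  # slowest item, three confirmations each
```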

2.4

The BCI switch

The switch for selecting a row (item) was implemented by training the BCI to discriminate between imagery and non-imagery EEG patterns. Signal processing was performed with Matlab/Simulink (MathWorks, Natick, MA, USA). The standard common spatial patterns (CSP) method was used to design class-specific spatial filters in user-specific frequency bands, and Fisher’s linear discriminant analysis (LDA) was used to classify the log-transformed normalized variance of 4 CSP projections (m = 2 filters per class). The CSP method projects multi-channel EEG data segments from two classes into a low-dimensional spatial subspace such that the variances of the projected time series are optimal for discrimination. LDA projects the resulting CSP features onto a line and performs classification by thresholding in this one-dimensional space.
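A compact NumPy/SciPy sketch of the CSP plus LDA pipeline is given below. It is not the authors’ Matlab/Simulink implementation: it assumes band-pass filtered trials of shape (trials, channels, samples), computes CSP from trace-normalized covariances via the generalized eigenvalue problem, keeps `n_pairs = 2` filters from each end of the spectrum (4 projections), and adds a small ridge term to the LDA scatter matrix for numerical stability (an assumption). All function names are hypothetical.

```python
import numpy as np
from scipy.linalg import eigh


def csp_filters(trials_a, trials_b, n_pairs=2):
    """CSP spatial filters from two classes of band-pass filtered trials.

    trials_*: arrays of shape (n_trials, n_channels, n_samples).
    Returns W of shape (2 * n_pairs, n_channels).
    """
    def mean_cov(trials):
        return np.mean([x @ x.T / np.trace(x @ x.T) for x in trials], axis=0)

    ca, cb = mean_cov(trials_a), mean_cov(trials_b)
    # Generalized eigenproblem ca w = lambda (ca + cb) w; eigenvalues ascending.
    _, vecs = eigh(ca, ca + cb)
    idx = np.r_[np.arange(n_pairs), np.arange(-n_pairs, 0)]  # both ends of the spectrum
    return vecs[:, idx].T


def log_var_features(W, trials):
    """Log-transformed normalized variance of the CSP projections."""
    z = np.einsum("fc,tcs->tfs", W, trials)
    var = z.var(axis=2)
    return np.log(var / var.sum(axis=1, keepdims=True))


def fit_lda(fa, fb, ridge=1e-6):
    """Fisher LDA: weight vector and bias; a score > 0 means class a (imagery)."""
    mu_a, mu_b = fa.mean(axis=0), fb.mean(axis=0)
    sw = np.cov(fa, rowvar=False) + np.cov(fb, rowvar=False)
    sw += ridge * np.eye(sw.shape[0])  # small ridge for numerical stability (assumption)
    w = np.linalg.solve(sw, mu_a - mu_b)
    bias = -w @ (mu_a + mu_b) / 2.0    # threshold halfway between the class means
    return w, bias
```

Usage: compute `W = csp_filters(imagery_trials, rest_trials)`, extract features for both classes with `log_var_features`, fit `w, bias = fit_lda(...)`, and classify a new segment by the sign of `features @ w + bias`.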

2.4.1

Fully online and automated artifact removal (FORCe)

Many BCI users with CP exhibit high levels of spontaneous movement. Therefore, an automated method for removing electromyogram (EMG) artifacts was developed and integrated into the online BCI system. EEG signals are first decomposed with the wavelet decomposition method (“Sym4” wavelet). The approximation coefficients (low-frequency components) are then decomposed into independent components (ICs) by the second-order blind identification (SOBI) algorithm. Various criteria that characterize artifacts are applied to the ICs. These criteria include the amount of temporal dependency within the signal, the amount of spiking activity, the kurtosis of the signal, the similarity of the power spectral density (PSD) to a 1/f distribution (with f denoting frequency), the PSD in the gamma band (> 30 Hz), the standard deviation and topographic distribution of the amplitudes, and peak values. ICs that fail these criteria are classified as artifacts and excluded. Clean EEG is obtained by reconstructing the signal from the remaining ICs (Fig. 1).
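FORCe combines wavelet decomposition, SOBI and several artifact criteria; reproducing it is beyond a short example. The sketch below only illustrates the final reject-and-reconstruct step using a single criterion, kurtosis, which is one of the criteria listed above. It assumes the sources and the mixing matrix have already been estimated (for example by SOBI), and the threshold of 5 is a hypothetical value, not the one used in FORCe.

```python
import numpy as np
from scipy.stats import kurtosis


def remove_high_kurtosis_components(sources, mixing, threshold=5.0):
    """Reject components with high excess kurtosis and reconstruct the EEG.

    Simplified single-criterion illustration, not FORCe itself.
    sources: (n_components, n_samples); mixing: (n_channels, n_components).
    Returns (cleaned_eeg, indices_of_rejected_components).
    """
    k = kurtosis(sources, axis=1)  # Fisher (excess) kurtosis, about 0 for Gaussian signals
    keep = k <= threshold          # spiky, heavy-tailed components are treated as artifacts
    cleaned = mixing[:, keep] @ sources[keep]
    return cleaned, np.flatnonzero(~keep)
```

Spiking EMG bursts produce heavy-tailed amplitude distributions and hence large kurtosis, whereas ongoing EEG is closer to Gaussian; in practice a single criterion is rarely sufficient, which is why FORCe combines several.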

Since the row-column scan mode provides visual cues, EEG signal analysis was synchronized to the marker onset (t = 0 s, Fig. 1, highlight event). Four non-overlapping 1-s EEG segments S_t = [t − 1, t] s were extracted at t = 0, 1, 2 and 3 s, and the FORCe method was applied to each S_t independently at time t. The method was applied to 1-s segments because of its high computational demand: cleaning a 1-s segment took about 300 ms on a modern laptop, so all segments were cleaned by t = 3.3 s. Since discrete feedback was provided at t = 4 s, sufficient time was left for the remaining signal processing. The clean 1-s segments were concatenated into one 4-s segment (covering −1 to 3 s) and band-pass filtered in a user-specific frequency range. The first second of the filtered EEG was discarded to eliminate filter boundary effects. The log-transformed normalized variances of the 4 CSP projections were computed from the remaining 3-s EEG segment and classified.
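The segmentation scheme can be sketched as follows at the 512 Hz sampling rate used in this study. The `clean` argument is a placeholder for FORCe (identity by default), and the 4th-order Butterworth band-pass applied with `sosfilt` is an assumption, since the filter design is not specified here. The function name `extract_trial` is hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfilt

FS = 512  # sampling rate in Hz


def extract_trial(eeg, onset, band, clean=lambda seg: seg, fs=FS):
    """Build the 3-s analysis window for one highlight event.

    eeg: (n_channels, n_samples); onset: sample index of the marker (t = 0 s),
    must be >= fs. `clean` stands in for FORCe and is applied to each 1-s
    segment S_t = [t - 1, t] s, t = 0..3. The filter design is an assumption.
    """
    segments = []
    for t in range(4):
        stop = onset + t * fs
        segments.append(clean(eeg[:, stop - fs:stop]))
    x = np.concatenate(segments, axis=1)  # 4-s window covering -1 to 3 s
    sos = butter(4, band, btype="bandpass", fs=fs, output="sos")
    y = sosfilt(sos, x, axis=1)           # causal band-pass filter
    return y[:, fs:]                      # drop the first second (filter transient)
```

Discarding the first second after filtering is what makes the extra pre-onset segment S_0 useful: the filter transient falls into data that precede the highlight and are not classified.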

2.4.2

Evidence accumulation

Spasms or involuntary movements may prevent users from looking at the GUI, thereby preventing interaction, or may even induce EEG patterns that are mistakenly interpreted as imagery. To reduce such misinterpretations, we implemented evidence accumulation: users were asked to repeatedly confirm a selection before it was accepted by the BCI. More precisely, each row (item) scan step was classified, and the classifier output was stored in a ring buffer of size N for each row (item). The highlighted row (item) was selected only when at least m of its last N classification outputs were assigned to the imagery class. Because of the sequential scan order, it takes at least m scan cycles to select a row (item). Fig. 1 shows an example row selection. To notify the user that imagery was detected while the current row (item) was highlighted, an auditory beep was presented at marker offset. A selection (m out of N imagery detections) triggered the animation described above (supplementary video). This approach increases selection time but also improves the robustness of detection. Moreover, it provides end-users with on-demand access to the application, since accidental selections are unlikely while the user is not performing imagery.
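The m-out-of-N rule maps naturally onto one fixed-length ring buffer per row (item). The sketch below uses `collections.deque` with `maxlen=N` as the ring buffer; the class and function names, the 0-based element indices and the `classify(step, element)` callback are hypothetical, and buffers start empty (missing entries count as non-imagery).

```python
from collections import deque


class EvidenceAccumulator:
    """m-out-of-N decision rule with one ring buffer per scanned element."""

    def __init__(self, n_elements, m=3, n=5):
        self.m = m
        self.buffers = [deque(maxlen=n) for _ in range(n_elements)]

    def update(self, element, is_imagery):
        """Store the classifier output for `element`; True means 'select it'."""
        buf = self.buffers[element]
        buf.append(bool(is_imagery))  # oldest output drops out once N are stored
        return sum(buf) >= self.m


def run_scan(classify, n_elements, m=3, n=5, max_steps=100):
    """Scan elements cyclically until one is selected.

    classify(step, element) -> bool is a hypothetical classifier callback.
    Returns (selected_element, n_scan_steps) or (None, max_steps).
    """
    acc = EvidenceAccumulator(n_elements, m, n)
    for step in range(max_steps):
        element = step % n_elements
        if acc.update(element, classify(step, element)):
            return element, step + 1
    return None, max_steps


# A perfect classifier targeting the second row (index 1) of a 3-row grid
# needs m = 3 visits to that row: selection after 2 * 3 + 2 = 8 scan steps.
print(run_scan(lambda step, element: element == 1, n_elements=3))
```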

2.5

BCI calibration and online item selection runs

Before the BCI can be operated, model parameters need to be adapted to user-specific EEG patterns. To this end, EEG trials of mental imagery were recorded from each user. The type of mental imagery was agreed with the user prior to calibration. The same experimental paradigm was used for BCI parameter calibration and online item selection: users were asked to choose a target item and to select it by performing the dedicated imagery each time the corresponding row (item) was highlighted, and not to perform the imagery when the target row (item) was not highlighted. During calibration, the BCI automatically presented auditory beeps (sham feedback for imagery detection) following an m = 3 out of N = 5 evidence accumulation procedure. More precisely, for each row (item), N = 5 scan cycles with m = 3 correct and N − m = 2 erroneous classifications were presented. The fifth cycle was always correct and triggered the animation sequence for the row (item); the remaining two correct and two erroneous outputs were presented in random order during cycles 1–4. Depending on the target item, 13–15 scan steps per row (item) were therefore required. Under this scheme, correct beeps were presented 54–60% of the time. This was intended to simulate real-world “worst case” accuracies and to train users to stay relaxed in the presence of erroneous BCI detections. Clean 3-s EEG segments of the classes imagery and non-imagery (the latter available in larger numbers because of the 3 × 3 grid) were used to train the CSP filters and the LDA classifier. A 10-times 10-fold cross-validation was used to estimate discriminative performance. Since changes in spontaneous rhythms are user-specific, several frequency bands were evaluated and the best-performing band was selected manually for each user. The candidate bands were 7–30 Hz, 8–12 Hz, 8–24 Hz, 8–36 Hz, 16–24 Hz, 16–36 Hz and 24–36 Hz.
The identified parameters were then used during online item selection runs to translate EEG patterns into switch activations for selecting target items on the row-column scanning communication board.
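The sham feedback schedule for the target row (item) can be sketched as below. This reflects one reading of the text: a correct output on the target row is a beep, an erroneous one is a missing beep, cycles 1–4 carry a random permutation of two of each, and cycle 5 always beeps and triggers the selection. How feedback on non-target rows was generated is not specified, so the sketch does not reproduce the 54–60% figure. Function names are hypothetical; targets are 1-based.

```python
import random


def sham_feedback(m=3, n=5, rng=None):
    """Sham feedback for the target row over n cycles (True = beep).

    Interpretation: cycles 1..n-1 hold m-1 beeps and n-m missing beeps in
    random order; cycle n always beeps, so the m-th beep selects the row.
    """
    rng = rng or random.Random()
    first = [True] * (m - 1) + [False] * (n - m)
    rng.shuffle(first)
    return first + [True]


def calibration_scan_steps(target, n_elements=3, n=5):
    """Scan steps until the target (1-based) is selected on its n-th visit."""
    return (n - 1) * n_elements + target


print(sham_feedback(rng=random.Random(1)))
print([calibration_scan_steps(t) for t in (1, 2, 3)])  # 13, 14 and 15 steps
```

Because the target never accumulates m beeps before the fifth cycle, every calibration selection takes exactly N visits, which yields the 13–15 scan steps per row (item) reported above.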

2.6

End-user, signal recording and system performance evaluation

A supporting study in 14 users with CP was performed to evaluate the performance and usability of the implemented system. Experiments were performed at AVAPACE daycare centers in Valencia, Spain and at end-users’ homes in Tübingen, Germany. Institutional review board (IRB) ethical approval was obtained for all measurements. All participants gave informed, oral consent. In addition, written consent was obtained for every participant. In some cases, written consent had to be provided by the participants’ legal representatives. Details of participants are summarized in Table 1 . Three out of fourteen users had to be excluded due to technical problems that resulted in insufficient EEG quality. Some users were naïve to the task and did not receive BCI training before participating in this study.

| User | Gender | Age | GMFCS | CP type | Sensory disturbances | Dominant hand | Additional information |
|---|---|---|---|---|---|---|---|
| S1 | M | 27 | V | Dystonic-Spastic | – | None | Anarthria; requires sitting support for trunk control; no difficulty breathing; yes/no communication by tongue signs, sometimes with the help of pictograms |
| S2* | F | 20 | IV | Atypical-Spastic | – | Left | Light dysarthria |
| S3* | F | 25 | V | Dystonic-Spastic | – | Left | Anarthria; requires sitting support for trunk control; no difficulty breathing; yes/no communication to simple questions by phonemes |
| S4 | M | 53 | V | Dystonic | – | Left | Dysarthria; requires sitting support for trunk control; no difficulty breathing |
| S5 | F | 56 | IV | Dystonic | Myopia | Right | Dysarthria; uses AAC communicator with ARASAAC system on tablet PC via touchscreen; no difficulty breathing |
| S6 | F | 38 | V | Spastic | – | Right | Anarthria; uses SAAC communicator with ARASAAC system on tablet PC (row-column scanning, face switch); requires sitting support for head and trunk control; uses electric wheelchair with chin joystick; no difficulty breathing |
| S7 | M | 40 | V | Dystonic-Spastic | – | Left | Anarthria; breathing difficulties; tracheotomy; neurogenic dysphagia; uses AAC (PCS, over 100 pictograms), accessed by caregiver-assisted scanning with bodily confirmation; sitting support |
| S8 | M | 33 | V | Spastic | – | Left | Anarthria; difficulty expectorating; neurogenic dysphagia; requires sitting support; uses AAC (Bliss system, more than 300 pictograms), accessed by scanning and operated with a facial muscle switch |
| S9 | M | 24 | IV | Dystonic | – | Right | Tetraplegic; controls electric wheelchair with right hand; can stand in a standing frame; uses upper limbs to control a portable personal computer (PC) with keyboard and Hermes PC software for communication |
| S10 | M | 34 | V | Spastic | Left-side blindness and deafness | None | Anarthria; limited ability to hold head, trunk and limbs against gravity; navigates autonomously using electric wheelchair with head control device; communicates by writing on a tablet PC using scanning, a head-mounted access switch and speech synthesis |
| S11 | M | 39 | IV | Dystonic-Spastic | – | Left | Anarthria; uses electric wheelchair indoors and outdoors over short distances, controlled by a rod next to the left cheek; needs support to maintain seated trunk posture; uses AAC (Bliss system, 500 pictograms) with The Grid software on a tablet PC and scanning access |

EEG was recorded from 16 electrodes placed over cortical areas according to the international 10-20 system, at positions AFz, FC3, FCz, FC4, C3, Cz, C4, CP3, CPz, CP4, PO3, POz, PO4, O1, Oz and O2. Reference and ground electrodes were placed at Pz and P5, respectively. The g.GAMMAsys system with g.LADYbird active electrodes (Guger Technologies, Graz, Austria) and one g.USBamp biosignal amplifier were used to record EEG at a sampling rate of 512 Hz (50 Hz notch filter, 0.5–100 Hz band-pass).

Participants were seated in their wheelchairs. The tablet computer was placed about 80 cm in front of them at approximately eye level (Fig. 1). Before each experiment, participants received instructions on the task to be performed, both in writing (as a slideshow) and verbally. Relatives and caregivers explained the aim of the study, how to use the speller application and how to operate the BCI, so each user received individual instructions and explanations. The experiment consisted of the following steps. Firstly, a dedicated mental task was identified with the help of relatives and caregivers; possible tasks included motor imagery, mental arithmetic and word generation. Secondly, calibration runs were recorded and BCI parameters computed. Calibration runs were repeated until binary classification was better than random. Thirdly, online item selection runs were performed. For both calibration and online target item selection runs, users were asked to pick a target item before the run started. After each run, the data were analyzed, and CSP and LDA were updated whenever an increase in performance was expected.
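The text does not state how “better than random” was decided. One common way to define it for a two-class problem is a binomial threshold: the smallest number of correct trials k whose probability under chance (p = 0.5) is below a significance level. The sketch below illustrates that approach under the assumption of independent trials, which cross-validated accuracies only approximately satisfy; it is not necessarily the criterion used in this study, and `chance_threshold` is a hypothetical name.

```python
from scipy.stats import binom


def chance_threshold(n_trials, alpha=0.05, n_classes=2):
    """Smallest k with P(X >= k) < alpha for X ~ Binomial(n_trials, 1 / n_classes).

    Assumes independent trials; returns n_trials + 1 if no k qualifies.
    """
    p = 1.0 / n_classes
    for k in range(n_trials + 1):
        if binom.sf(k - 1, n_trials, p) < alpha:  # sf(k - 1) = P(X >= k)
            return k
    return n_trials + 1


k = chance_threshold(100)
print(k, k / 100)  # minimum number and fraction of correct trials out of 100
```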

2.7

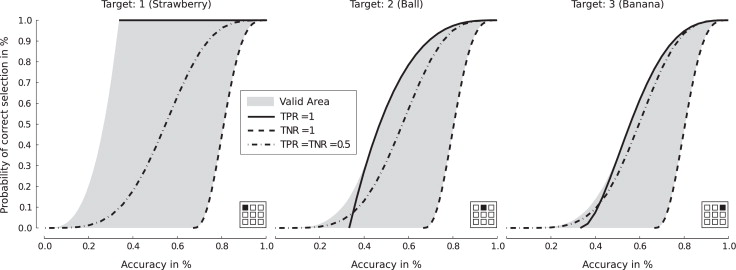

Evidence accumulation chance performance level

Accuracy, i.e., the number of true positive (TP) and true negative (TN) detections divided by the total number of detections, is commonly used to characterize BCI performance. The evidence accumulation procedure, however, makes the achieved accuracy difficult to interpret. Consider two cases. Firstly, if the classifier always outputs OFF, no selection is ever made; yet, since two out of three highlighted rows are non-targets, two thirds of the classifications are correct and the resulting accuracy is high. Secondly, if the classifier always outputs ON, the first row (item) is always selected, while the accuracy is low (one third). Fig. 2 illustrates the relationship between accuracy and selection probability for different target items as a function of the true positive and true negative rates (TPR and TNR). For a correct interpretation of the results, the performance of each item selection run was therefore compared against random selection. More specifically, we calculated the probability that a random classifier selects the target item within the same number of scan steps. Since there are infinitely many possible random classifiers, an unbiased classifier with TPR = TNR = 0.5 was chosen. The chance-level probability was computed by summing the probabilities of all correct selections that occur up to the number of scan steps required by the user. The probability that both row and item are selected was computed by multiplying the row-selection and column-selection probabilities. Low probability values (P < 0.05) indicate that a selection was unlikely to have occurred by chance.
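The chance-level computation can be sketched exactly for the m = 3 out of N = 5 rule. Under the assumptions stated here (i.i.d. outputs with P(imagery) = 0.5, independent ring buffers that start filled with OFF outputs, cyclic scanning), a dynamic program over buffer states gives the probability that a line first reaches m ON outputs at its j-th visit; the target is selected at that visit if no other line was selected earlier. This is a reconstruction for illustration, not the authors’ code, and all names are hypothetical.

```python
def first_hit_pmf(m, n, p, max_visits):
    """P(a line's ring buffer of size n first holds >= m ON outputs at visit j).

    Assumes i.i.d. outputs with P(ON) = p and a buffer initially filled with OFF.
    Returns a list for j = 1..max_visits.
    """
    states = {(0,) * n: 1.0}  # last n outputs -> probability, not yet selected
    pmf = []
    for _ in range(max_visits):
        new_states, hit = {}, 0.0
        for buf, prob in states.items():
            for out, q in ((1, p), (0, 1.0 - p)):
                nxt = buf[1:] + (out,)
                if sum(nxt) >= m:
                    hit += prob * q
                else:
                    new_states[nxt] = new_states.get(nxt, 0.0) + prob * q
        pmf.append(hit)
        states = new_states
    return pmf


def chance_selection_prob(target, n_lines, steps, m=3, n=5, p=0.5):
    """P(random classifier selects line `target` (1-based) within `steps` scan steps)."""
    max_visits = steps // n_lines + 1
    f = first_hit_pmf(m, n, p, max_visits)
    surv = [1.0]  # surv[j] = P(a line is not yet selected after j visits)
    for x in f:
        surv.append(surv[-1] - x)
    total = 0.0
    for j in range(1, max_visits + 1):
        if (j - 1) * n_lines + target > steps:  # j-th visit of the target is too late
            break
        prob = f[j - 1]
        for other in range(1, n_lines + 1):
            if other != target:
                # lines before the target have had j visits, lines after it j - 1
                prob *= surv[j if other < target else j - 1]
        total += prob
    return total
```

For an item at (row, col) reached after `row_steps` and `col_steps` scan steps, the chance level would then be `chance_selection_prob(row, 3, row_steps) * chance_selection_prob(col, 3, col_steps)`, following the multiplication described above.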
